SSL_shutdown() shuts down an active TLS/SSL connection. It sends the close_notify shutdown alert to the peer.

SSL_shutdown() tries to send the close_notify shutdown alert to the peer. Whether the operation succeeds or not, the SSL_SENT_SHUTDOWN flag is set and a currently open session is considered closed and good and will be kept in the session cache for further reuse.

Note that SSL_shutdown() must not be called if a previous fatal error has occurred on a connection, i.e., if SSL_get_error() has returned SSL_ERROR_SYSCALL or SSL_ERROR_SSL.

The shutdown procedure consists of two steps: sending of the close_notify shutdown alert, and reception of the peer's close_notify shutdown alert. The order of those two steps depends on the application.

It is acceptable for an application to only send its shutdown alert and then close the underlying connection without waiting for the peer's response. This way resources can be saved, as the process can already terminate or serve another connection. This should only be done when it is known that the other side will not send more data, otherwise there is a risk of a truncation attack.

When a client only writes and never reads from the connection, and the server has sent a session ticket to establish a session, the client might not be able to resume the session because it did not receive and process the session ticket from the server. If the application wants to be able to resume the session, it is recommended to perform the complete shutdown procedure (bidirectional close_notify alerts).

When the underlying connection is to be used for further communication, the complete shutdown procedure must be performed so that the peers stay synchronized.

SSL_shutdown() only closes the write direction. It is not possible to call SSL_write() after calling SSL_shutdown(). The read direction is closed by the peer.

The behaviour of SSL_shutdown() additionally depends on the underlying BIO. If the underlying BIO is blocking, SSL_shutdown() will only return once the handshake step has been finished or an error occurred.

If the underlying BIO is nonblocking, SSL_shutdown() will also return when the underlying BIO could not satisfy the needs of SSL_shutdown() to continue the handshake. In this case a call to SSL_get_error() with the return value of SSL_shutdown() will yield SSL_ERROR_WANT_READ or SSL_ERROR_WANT_WRITE. The calling process then must repeat the call after taking appropriate action to satisfy the needs of SSL_shutdown(). The action depends on the underlying BIO. When using a nonblocking socket, nothing needs to be done, but select() can be used to check for the required condition. When using a buffering BIO, like a BIO pair, data must be written into or retrieved out of the BIO before being able to continue.
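
The retry rule above can be sketched as a small loop. This is a model written so that it runs without OpenSSL. `step_result`, `step_fn`, `wait_fn`, `retry_nonblocking` and the scripted fake are all hypothetical names. In real code the step would be, by assumption, one SSL_shutdown() call classified with SSL_get_error(), and the wait would be a select() on the socket. The loop also stops on a fatal result, because SSL_shutdown() must not be called again after SSL_ERROR_SYSCALL or SSL_ERROR_SSL.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-ins for what SSL_get_error() reports after a call. */
typedef enum { R_OK, R_WANT_READ, R_WANT_WRITE, R_FATAL } step_result;

/* One attempt at the operation; in real code (assumption) this would call
   SSL_shutdown() and classify its return value with SSL_get_error(). */
typedef step_result (*step_fn)(void *ctx);

/* Waits until the socket is readable (want_write == 0) or writable
   (want_write == 1), e.g. with select(). */
typedef void (*wait_fn)(void *ctx, int want_write);

/* Repeats the same call until it succeeds or fails fatally.
   Returns 0 on success, -1 on a fatal error; *tries counts the calls. */
int retry_nonblocking(step_fn step, wait_fn wait_for, void *ctx, int *tries)
{
    assert(step && wait_for && tries);
    *tries = 0;
    for (;;) {
        step_result r = step(ctx);
        (*tries)++;
        switch (r) {
        case R_OK:
            return 0;
        case R_WANT_READ:
            wait_for(ctx, 0);
            break;
        case R_WANT_WRITE:
            wait_for(ctx, 1);
            break;
        default:
            /* Fatal (SSL_ERROR_SYSCALL / SSL_ERROR_SSL): never retry. */
            return -1;
        }
    }
}

/* Scripted fake so the loop can be exercised without a real connection. */
typedef struct {
    const step_result *script;
    size_t pos;
    int waits;
} fake_ctx;

static step_result fake_step(void *p)
{
    fake_ctx *f = p;
    return f->script[f->pos++];
}

static void fake_wait(void *p, int want_write)
{
    (void)want_write;
    ((fake_ctx *)p)->waits++;
}
```

With a script of WANT_WRITE, WANT_READ, OK, the loop makes three calls and waits twice before reporting success.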

After SSL_shutdown() returned 0, it is possible to call SSL_shutdown() again to wait for the peer's close_notify alert. SSL_shutdown() will return 1 in that case. However, it is recommended to wait for it using SSL_read() instead.

SSL_shutdown() can be modified to only set the connection to "shutdown" state but not actually send the close_notify alert messages, see SSL_CTX_set_quiet_shutdown(3). When "quiet shutdown" is enabled, SSL_shutdown() will always succeed and return 1. Note that this is not standards-compliant behaviour. It should only be done when the peer has a way to make sure all data has been received and doesn't wait for the close_notify alert message, otherwise an unexpected EOF will be reported.

There are implementations that do not send the required close_notify alert. If there is a need to communicate with such an implementation, and it's clear that all data has been received, do not wait for the peer's close_notify alert. Waiting for the close_notify alert when the peer just closes the connection will result in an error being generated. The error can be ignored using the SSL_OP_IGNORE_UNEXPECTED_EOF option. For more information see SSL_CTX_set_options(3).

First to close the connection

When the application is the first party to send the close_notify alert, SSL_shutdown() will only send the alert and then set the SSL_SENT_SHUTDOWN flag (so that the session is considered good and will be kept in the cache). If successful, SSL_shutdown() will return 0.

If a unidirectional shutdown is enough (the underlying connection is to be closed anyway), this first successful call to SSL_shutdown() is sufficient.

In order to complete the bidirectional shutdown handshake, the peer needs to send back a close_notify alert. The SSL_RECEIVED_SHUTDOWN flag will be set after receiving and processing it.

The peer is still allowed to send data after receiving the close_notify alert. When it is done sending data, it will send its own close_notify alert. SSL_read() should be called until all data is received. SSL_read() will indicate the end of the peer data by returning <= 0 and SSL_get_error() returning SSL_ERROR_ZERO_RETURN.

Peer closes the connection

If the peer already sent the close_notify alert and it was already processed implicitly inside another function (SSL_read(3)), the SSL_RECEIVED_SHUTDOWN flag is set. SSL_read() will return <= 0 in that case, and SSL_get_error() will return SSL_ERROR_ZERO_RETURN. SSL_shutdown() will send the close_notify alert and set the SSL_SENT_SHUTDOWN flag. If successful, SSL_shutdown() will return 1.

Whether SSL_RECEIVED_SHUTDOWN is already set can be checked using the SSL_get_shutdown() call (see also SSL_set_shutdown(3)).
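
The two orderings described above ("First to close the connection" and "Peer closes the connection") can be modeled in a few lines. This sketch does not use OpenSSL. `MODEL_SENT` and `MODEL_RECEIVED` play the roles of the SSL_SENT_SHUTDOWN and SSL_RECEIVED_SHUTDOWN bits that SSL_get_shutdown() reports. `model_shutdown()`, `model_read()` and `close_first()` are hypothetical stand-ins that follow the return values described in the text.

```c
#include <assert.h>
#include <stddef.h>

/* Stand-ins for the SSL_SENT_SHUTDOWN / SSL_RECEIVED_SHUTDOWN bits. */
enum { MODEL_SENT = 1, MODEL_RECEIVED = 2 };

/* Records the peer has sent, in arrival order (hypothetical). */
typedef enum { REC_DATA, REC_CLOSE_NOTIFY } record_t;

typedef struct {
    int flags;            /* what SSL_get_shutdown() would report */
    const record_t *in;   /* incoming records */
    size_t n_in, pos;
} model_conn;

/* Like SSL_shutdown(): send close_notify and set SENT. Returns 1 if the
   peer's close_notify was already processed, otherwise 0. */
int model_shutdown(model_conn *c)
{
    c->flags |= MODEL_SENT;
    return (c->flags & MODEL_RECEIVED) ? 1 : 0;
}

/* Like SSL_read(): 1 for a data record; 0 once close_notify is processed
   (the SSL_ERROR_ZERO_RETURN case), which sets RECEIVED; -1 if the peer
   closed without close_notify (unexpected EOF). */
int model_read(model_conn *c)
{
    if (c->pos >= c->n_in)
        return -1;
    if (c->in[c->pos++] == REC_CLOSE_NOTIFY) {
        c->flags |= MODEL_RECEIVED;
        return 0;
    }
    return 1;
}

/* "First to close": send close_notify, then keep reading the peer's
   remaining data until its close_notify arrives. Returns the number of
   data records drained, or -1 if close_notify never came. */
int close_first(model_conn *c)
{
    int drained = 0;
    assert(!(c->flags & MODEL_SENT));
    if (model_shutdown(c) == 1)
        return 0;  /* the peer had already closed: nothing to drain */
    for (;;) {
        int r = model_read(c);
        if (r > 0) {
            drained++;
            continue;
        }
        return r == 0 ? drained : -1;
    }
}
```

When the peer closes first, the read returns 0 and sets RECEIVED. The following shutdown then returns 1, matching the second case above.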

But this depends entirely on the model; it seems that pruned models no longer recognize celebrities' faces.

And in img2img, choose this

I made a simple

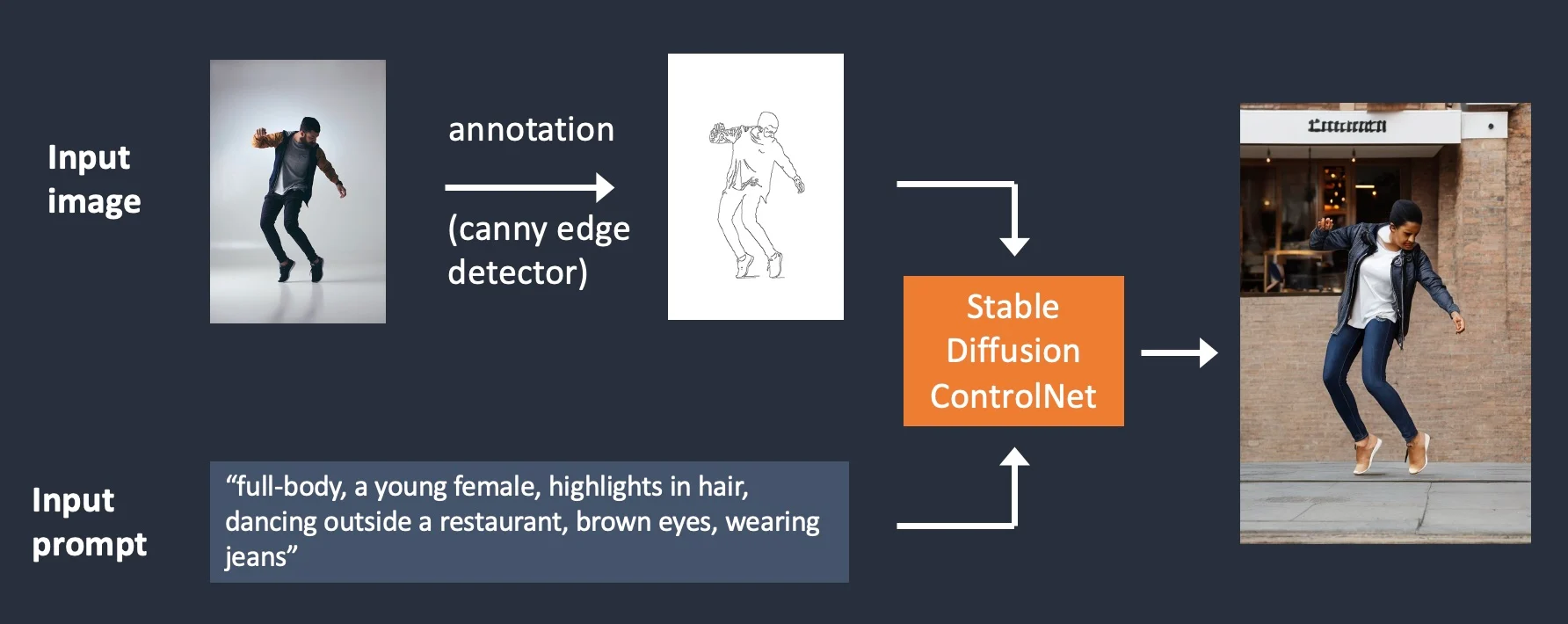

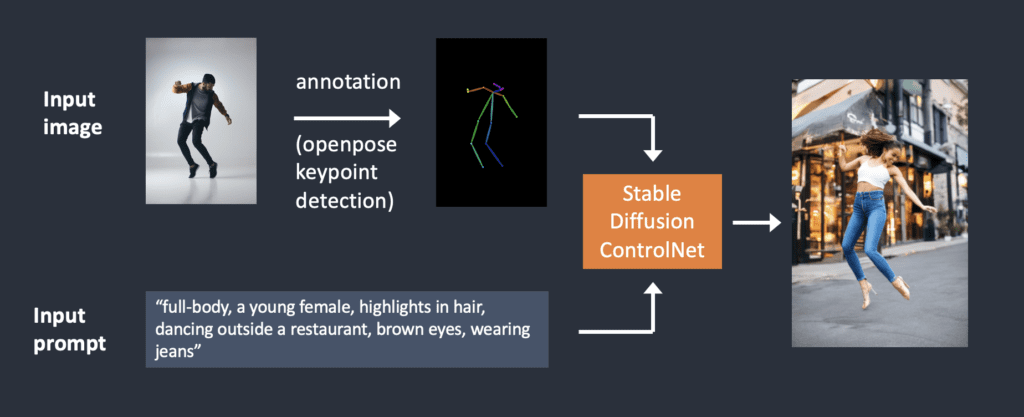

Could one say that ControlNet requires

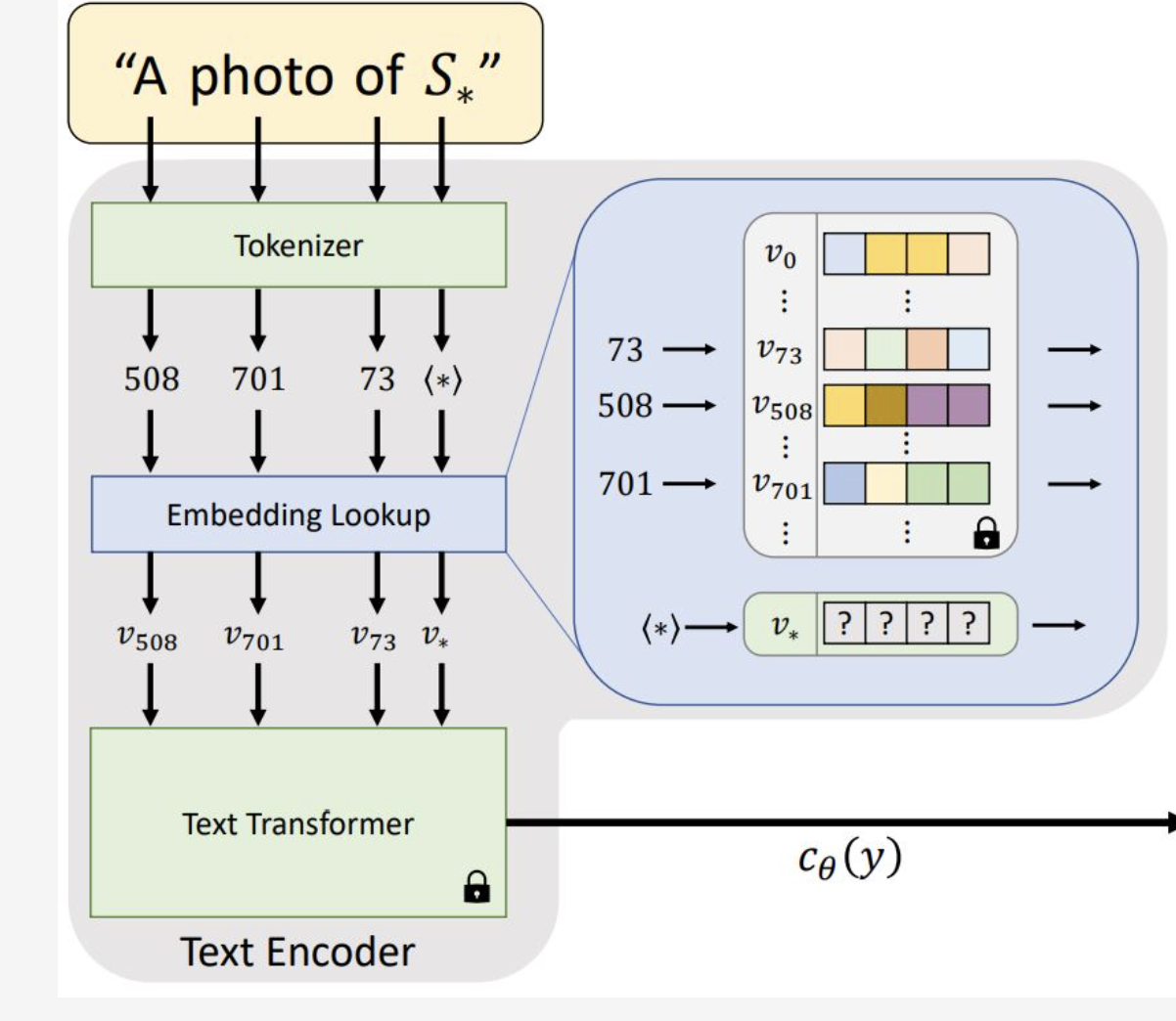

Here is a process that goes from image to language and then from language back to image. It reminds me once again of:

Then, while running it, I found that this step kept stalling and never advanced.

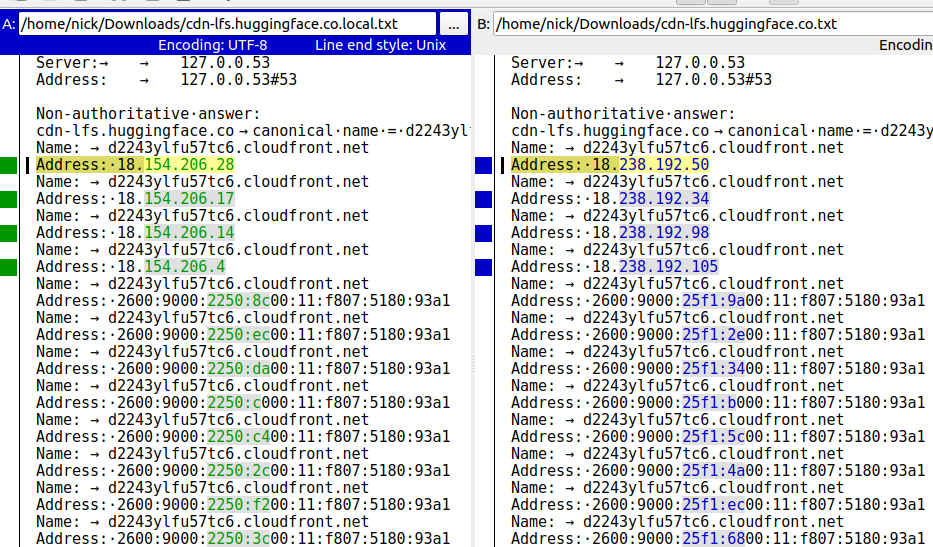

What causes this difference? Is it simply that different regions naturally produce different results?

And

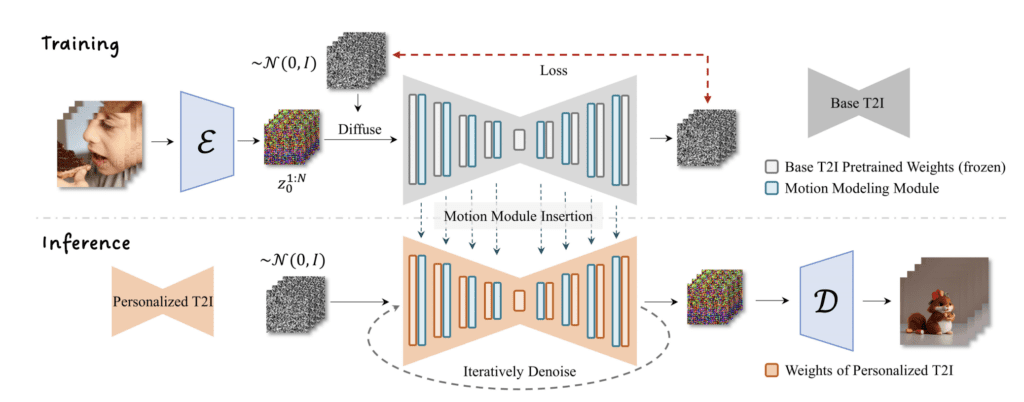

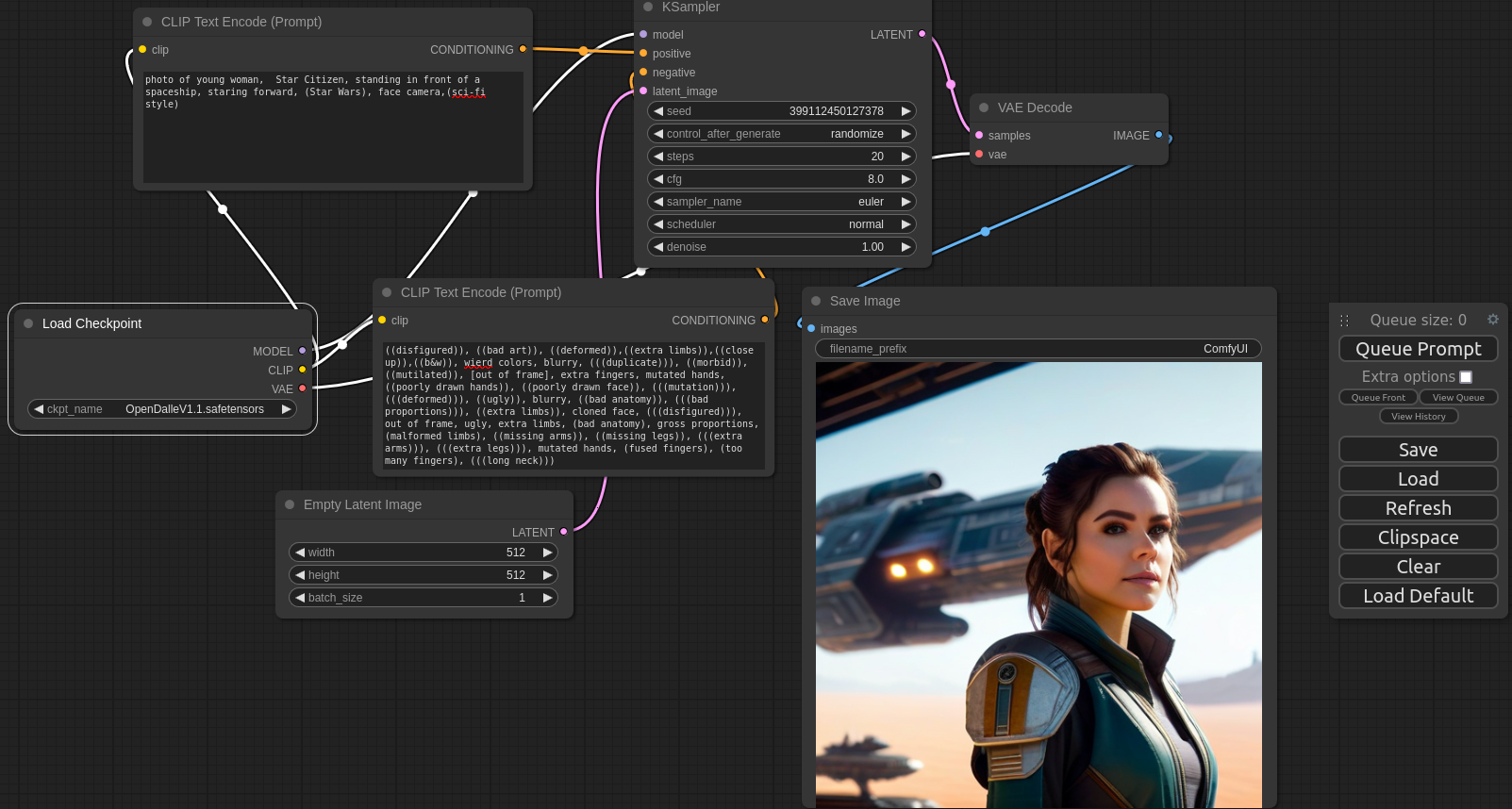

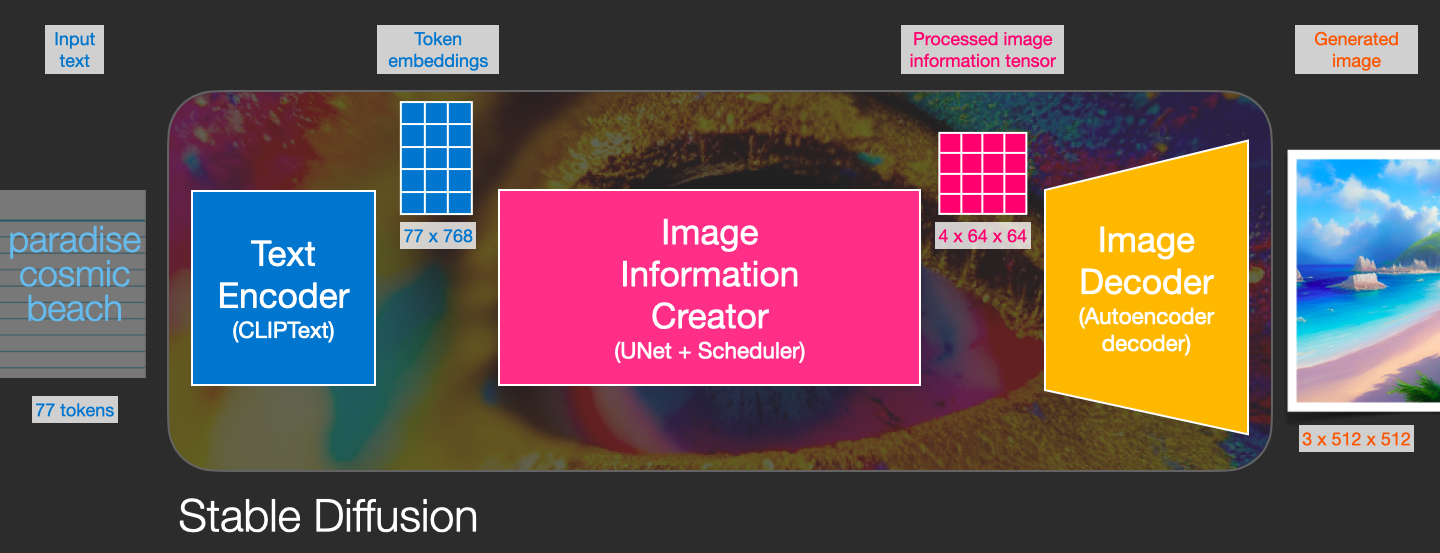

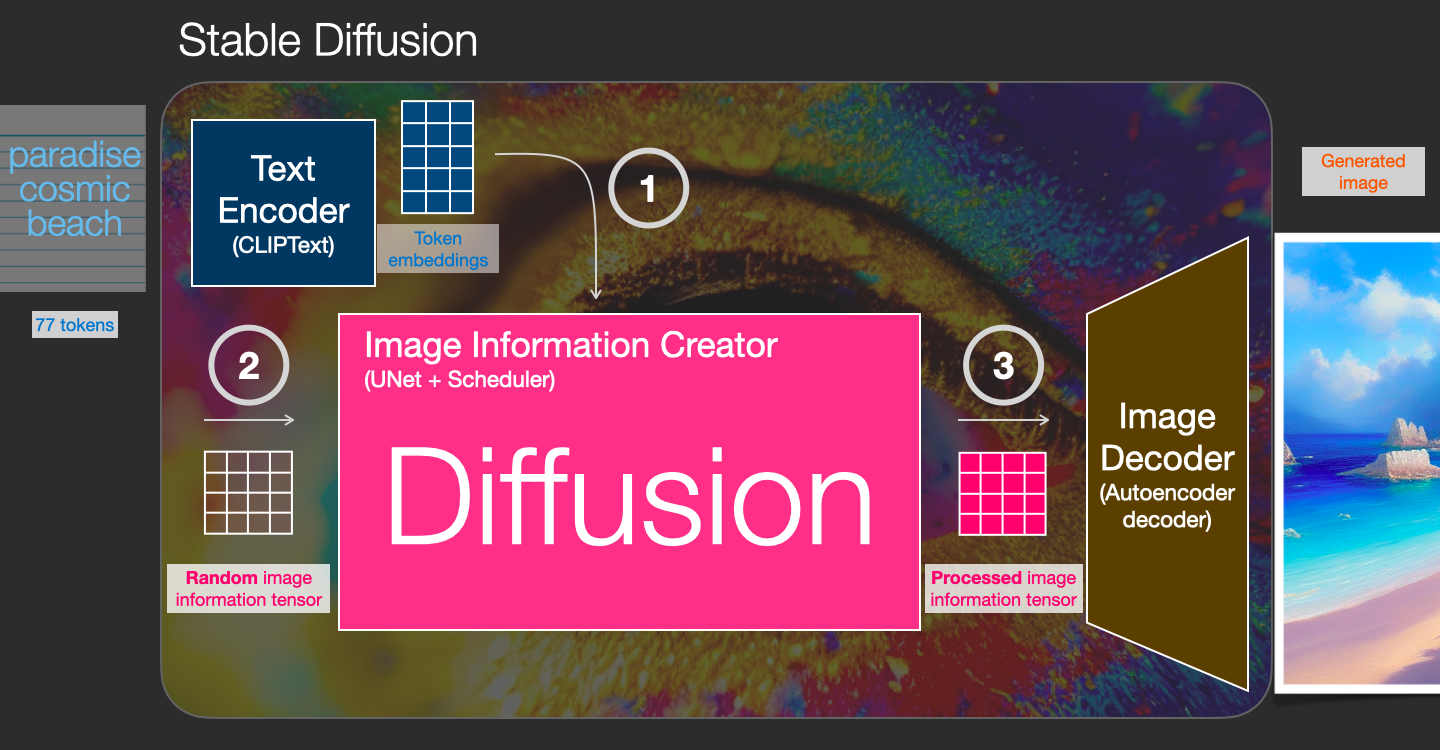

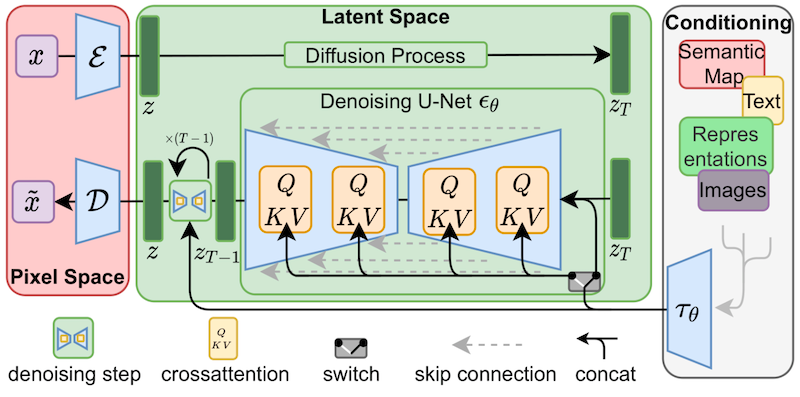

This pipeline seems to be the one described in many articles, but I still don't understand it. This article

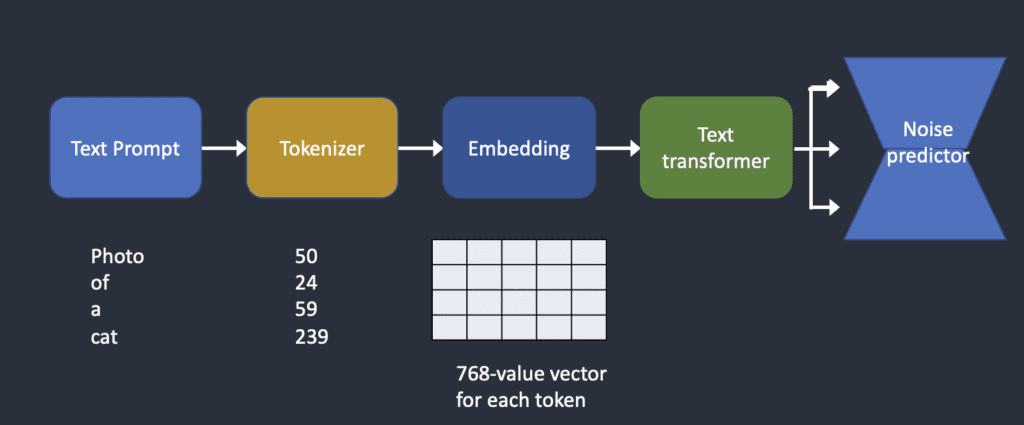

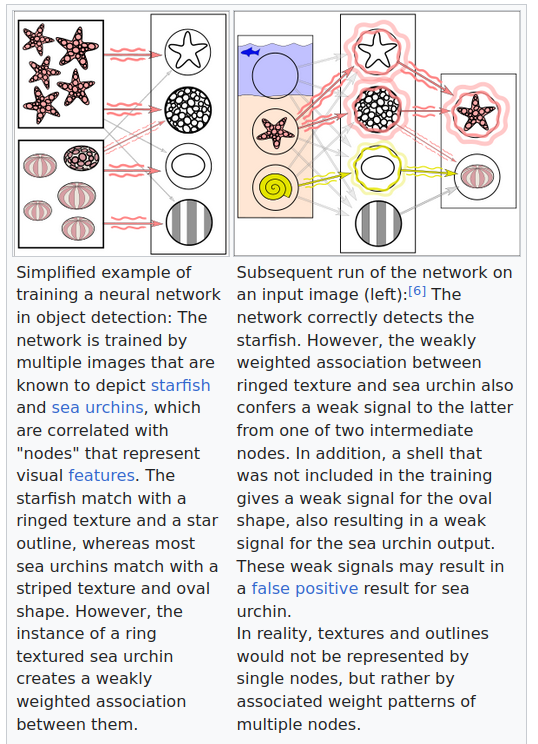

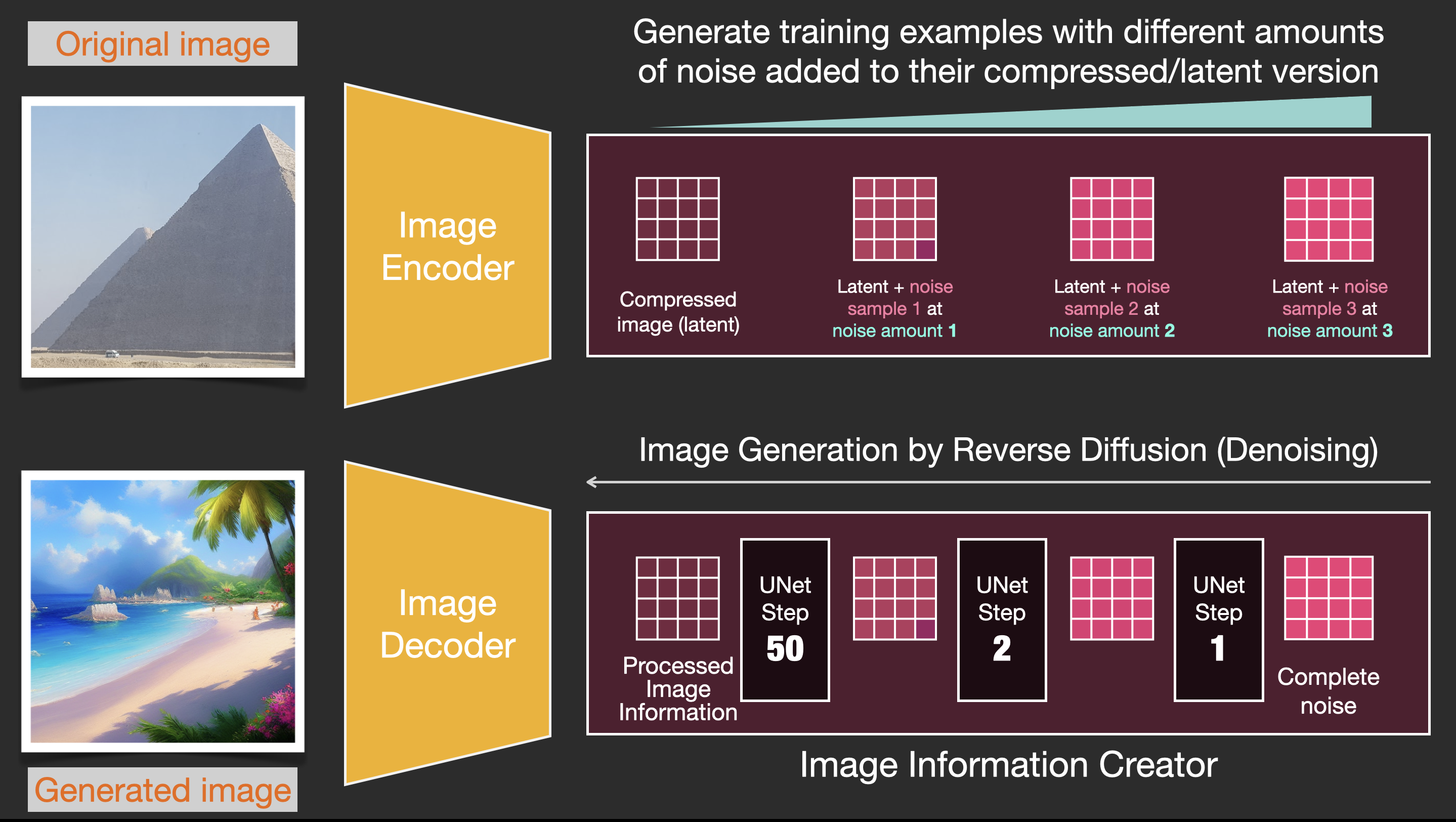

This figure is easy to understand, but what is meant by

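As a joke, one could say diffusion is like stir-frying: it takes a pile of input text, adds a series of seasonings, and cooks up a whole dish. That is, of course, how you would explain it to grandma and grandpa.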

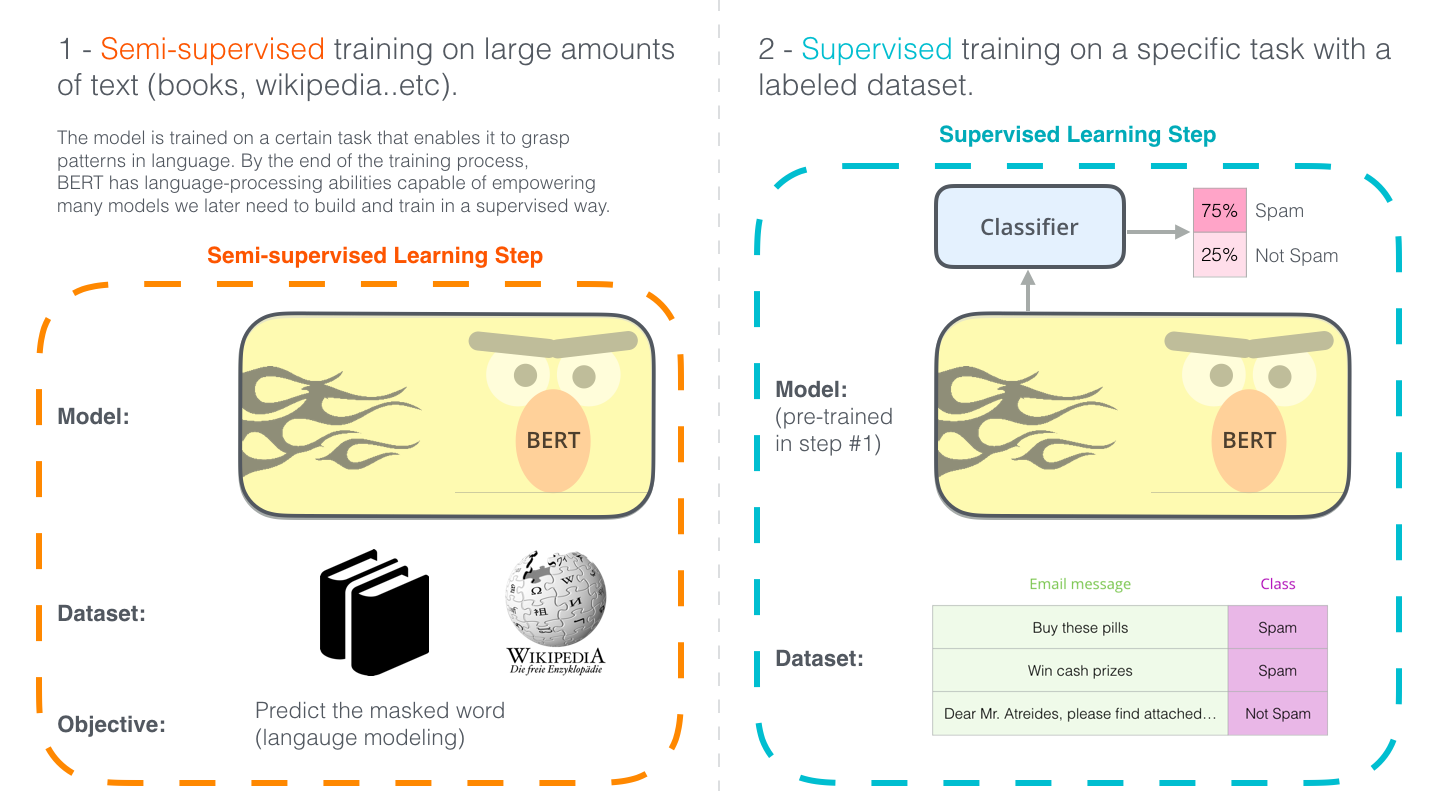

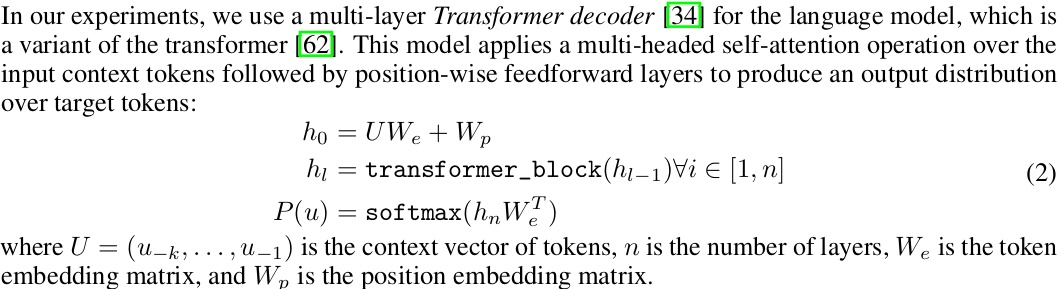

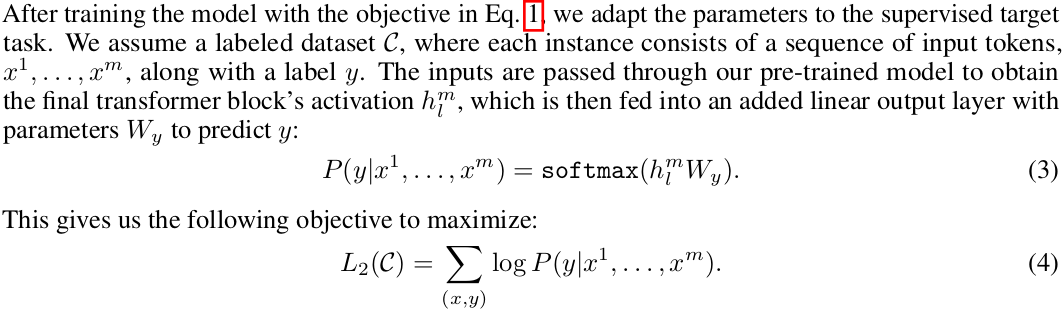

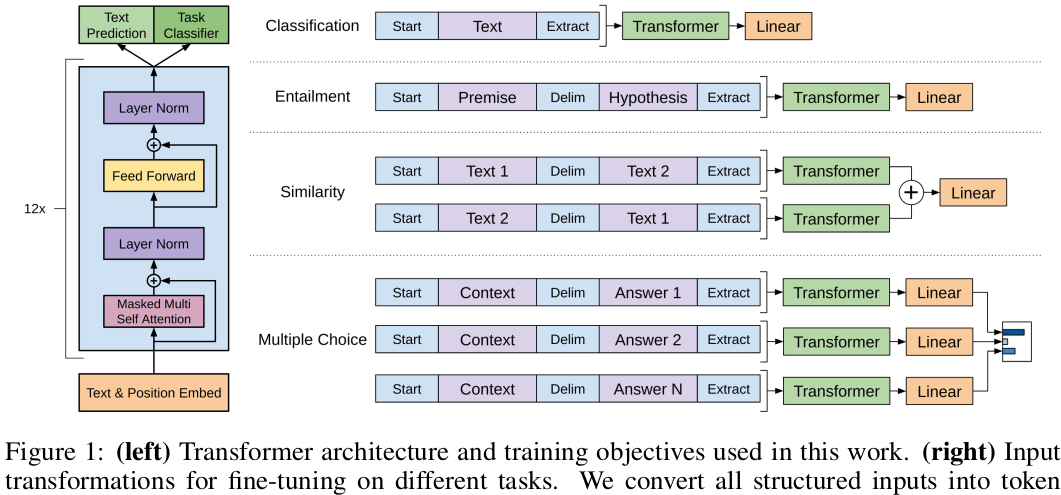

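These are the two training stages: a semi-supervised learning stage and a supervised learning stage. Using Wikipedia as training material seems very sensible, because it is arguably the most complete and well-organized hub of text and images anywhere. There is nothing quite comparable for video and images; perhaps YouTube?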

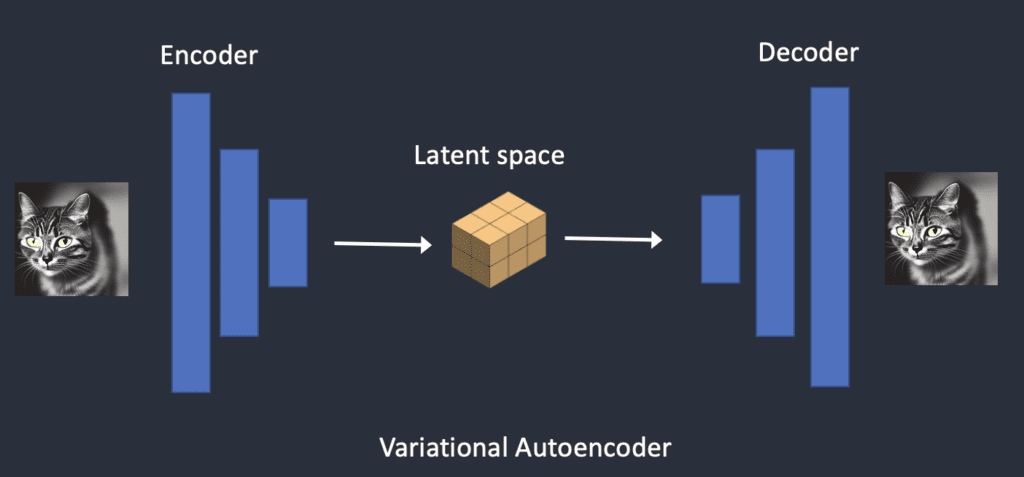

The core here is, naturally, the latent space. What kind of space is it?

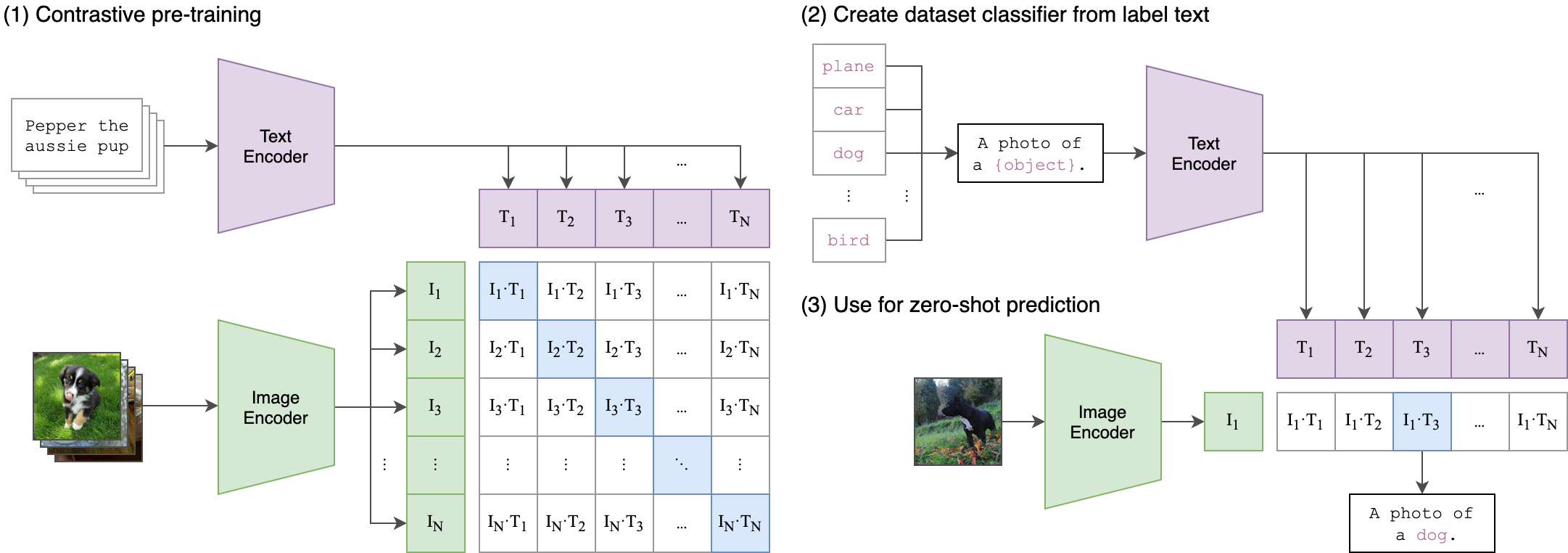

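Is this schematic literal? The text and image vectors seem to be combined into a matrix; does it really take every possible pairing? But my understanding above was that each token is one embedding, and if that is right, there would be as many vectors as there are tokens, so how would that work...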

At least I learned what the abbreviation CLIP stands for; that may be the only thing I understood.
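
On the matrix question above, here is my understanding of CLIP; it is worth checking against the paper. Each text is reduced to a single embedding, not one vector per token, and each image to one embedding. For a batch of N image-text pairs, all N×N pairwise similarities are computed. Training pushes the diagonal (the matching pairs) up and the off-diagonal entries down. The sketch below computes that similarity matrix. It assumes the embeddings are already L2-normalized, so the dot product equals the cosine similarity. `similarity_matrix` and `best_text_for_image` are illustrative names.

```c
#include <assert.h>
#include <stddef.h>

/* sim[i*n + j] = <image_i, text_j> for n pairs of d-dimensional unit
   vectors; img and txt are row-major (img[i*d + k]). */
void similarity_matrix(const double *img, const double *txt,
                       size_t n, size_t d, double *sim)
{
    assert(img && txt && sim);
    for (size_t i = 0; i < n; i++)
        for (size_t j = 0; j < n; j++) {
            double dot = 0.0;
            for (size_t k = 0; k < d; k++)
                dot += img[i * d + k] * txt[j * d + k];
            sim[i * n + j] = dot;
        }
}

/* For image i (row i), returns the index of the most similar text. */
size_t best_text_for_image(const double *sim, size_t n, size_t i)
{
    size_t best = 0;
    for (size_t j = 1; j < n; j++)
        if (sim[i * n + j] > sim[i * n + best])
            best = j;
    return best;
}
```

Zero-shot classification then amounts to picking, for each image row, the column with the largest similarity.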

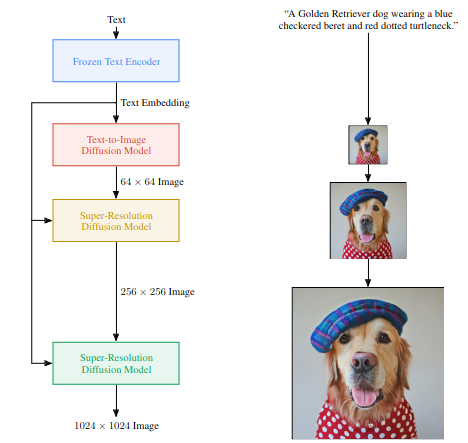

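Although it "borrows a hen to lay its eggs" (it builds on others' components), it is quite original, and I'm very interested in its DrawBench.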

Admittedly, a cartoon style can hide most of the flaws; that may be one workaround for now.

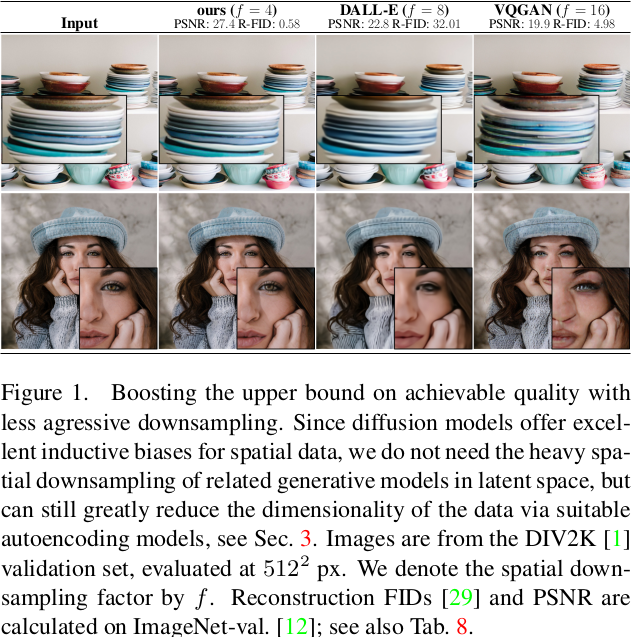

This figure comes from this article

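Comparing compression results is a hard, head-to-head metric: no matter how clever your algorithm or how high your compression ratio, once your reconstruction quality falls short, you are sentenced to death almost immediately.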

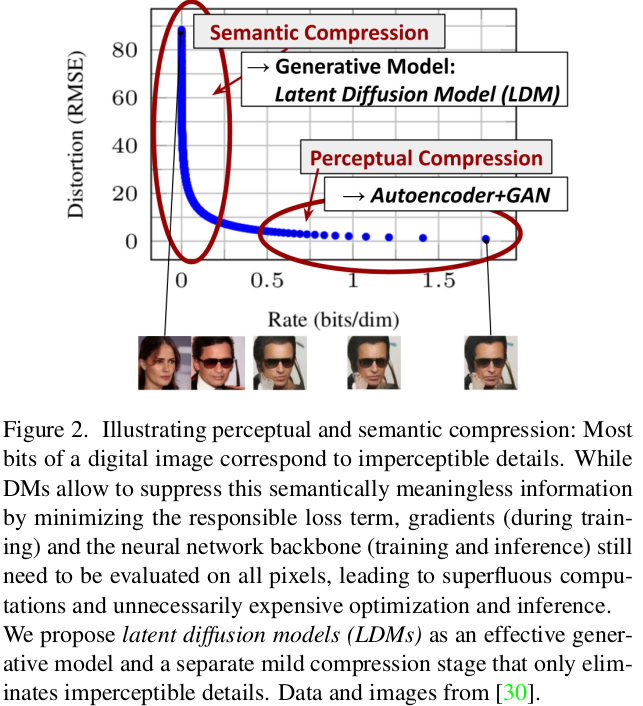

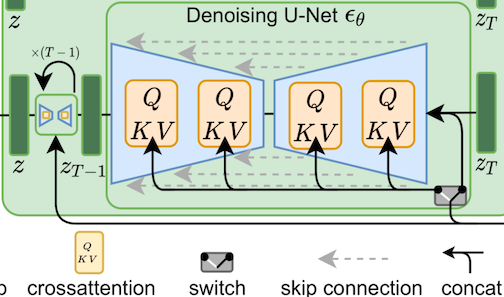

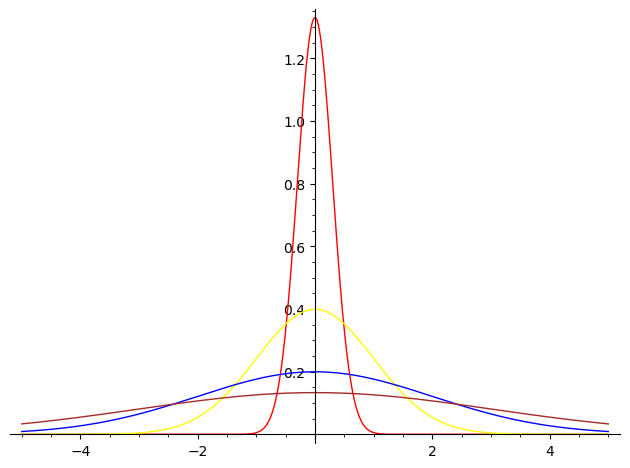

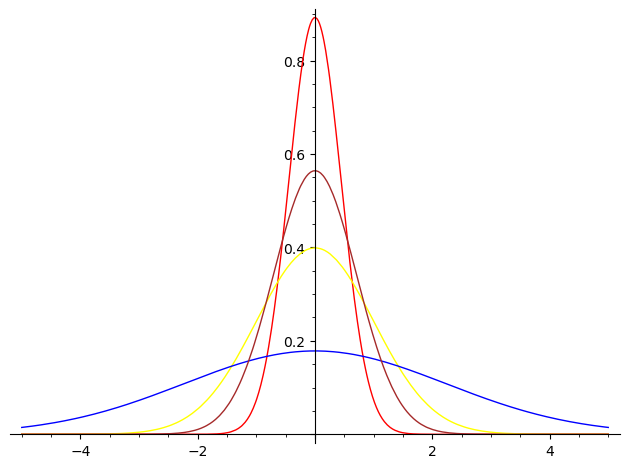

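This figure is the key to understanding what I'm reading, and its meaning runs very deep; I still haven't fully grasped it. First I need to go back and study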

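If nature really can be broken down into individual so-called features, then recognizing an object is simply recognizing a combination of features. After a neural network filters out so-called noise based on luminous flux, the strong connections that remain naturally become the fast lane for the next recognition, or reconstruction. Nature tends to choose the simplest, most reliable path, which people have summarized as Occam's razor: it is sharp because it is pared down, and what comes from somewhere returns there: From light to darkness; From darkness, light! Since the signal that survives loss and noise interference must be the intrinsic signal, whatever recurs must be the intrinsic signal. What we usually need to solve is how to simulate the loss of the signal as fully as possible while still reconstructing it as well as possible; rather than "reconstruction", it is more accurate to say that passing inspection as acceptable counts as success. Humanity's only criterion for feedback from the objective external world is usefulness. Who decides whether a portrait looks like its subject? It is decided by comparison. The Turing test rests on the same principle: there is no mathematical method for defining whether a copy is credible, and the only test is whether the user can spot the difference. Practice is the sole criterion of truth; if humans are the only practitioners, humans are the only judges. If learning machines are the only practitioners, then let machines be the judges of machines.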

我

以为这个对于客观复杂世界作出机械刻板一比一复刻的思路是过于天真的,因为现实是资源永远是有限的宝贵的,而自然界的信息几乎是无穷的,以有限的处理能力

硬抗无限的输入信息是徒劳的。人类智能进化史就是压缩还原的不断自我平衡,去粗取精,去伪存真的真正目的是自然处理能力的不足。同样的,现在人类发明的种

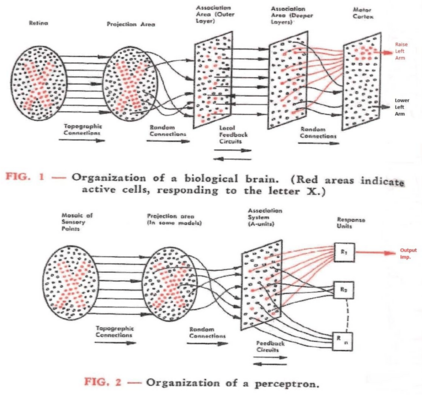

种算法也是现实的计算能力与客观处理的要求的之间的矛盾的某种妥协产物,如果有一天在虚拟现实里实现真正的Perceptron我也不会感到惊奇,但是不

是现在。

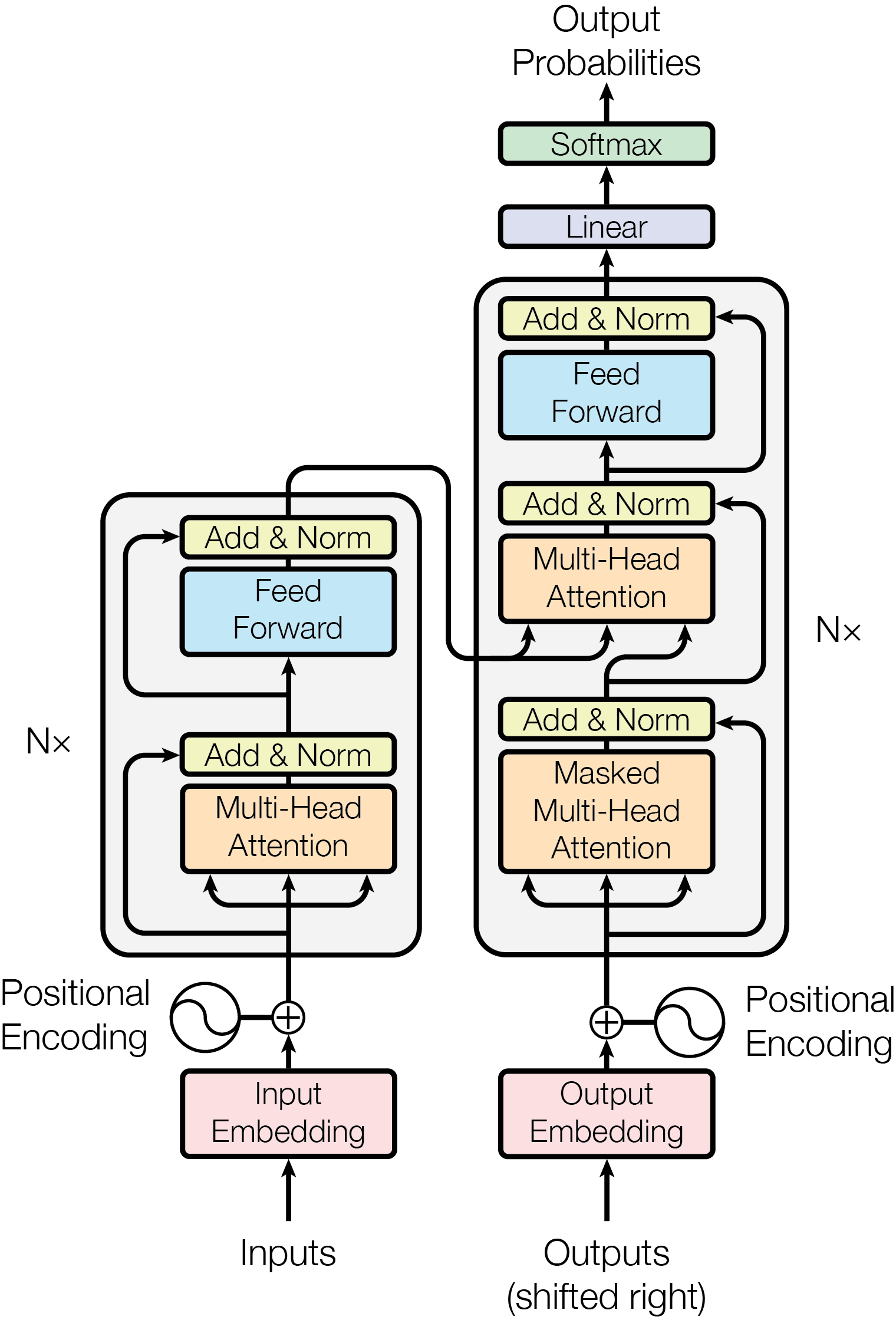

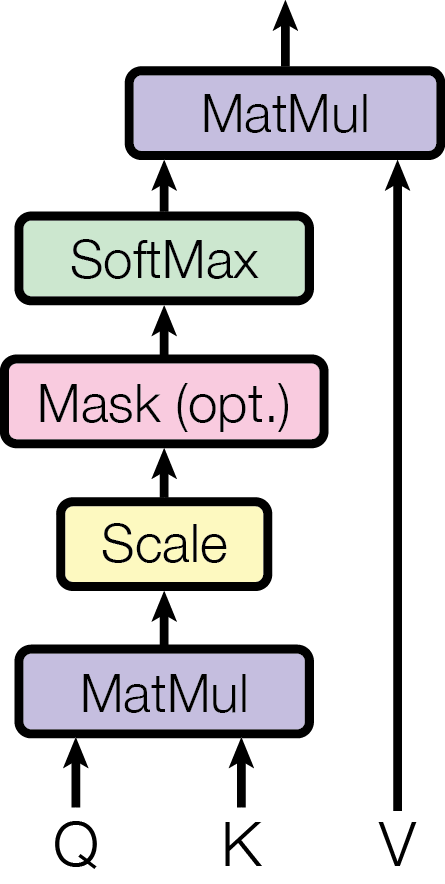

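The real leap is parallel computation, and the reasoning behind it is surprisingly simple: seemingly great leaps are nothing more than rising onto your tiptoes for a few steps during an ordinary walk. That is, what used to be computed one vector at a time is now computed as a matrix, so the computer's optimized matrix computation can replace many separate vector computations, especially for high-dimensional matrices.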
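
The "vectors into a matrix" idea can be shown directly. Multiplying each of m row vectors by the same weight matrix W gives exactly the rows of X·W, where X stacks those vectors. The plain loops below only show that the two give identical results. In practice, the speed-up comes from handing the single matrix product to an optimized routine (for example a BLAS GEMM or a GPU kernel), which this sketch does not use. The function names are illustrative.

```c
#include <assert.h>
#include <stddef.h>

/* y = x * W for one row vector x (length n); W is n x p, row-major. */
void vec_mat(const double *x, const double *W, size_t n, size_t p, double *y)
{
    for (size_t j = 0; j < p; j++) {
        double s = 0.0;
        for (size_t k = 0; k < n; k++)
            s += x[k] * W[k * p + j];
        y[j] = s;
    }
}

/* Y = X * W with X (m x n) holding m row vectors: one matrix product
   replaces m separate vector products. */
void mat_mat(const double *X, const double *W, size_t m, size_t n, size_t p,
             double *Y)
{
    assert(X && W && Y);
    for (size_t i = 0; i < m; i++)
        for (size_t j = 0; j < p; j++) {
            double s = 0.0;
            for (size_t k = 0; k < n; k++)
                s += X[i * n + k] * W[k * p + j];
            Y[i * p + j] = s;
        }
}
```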

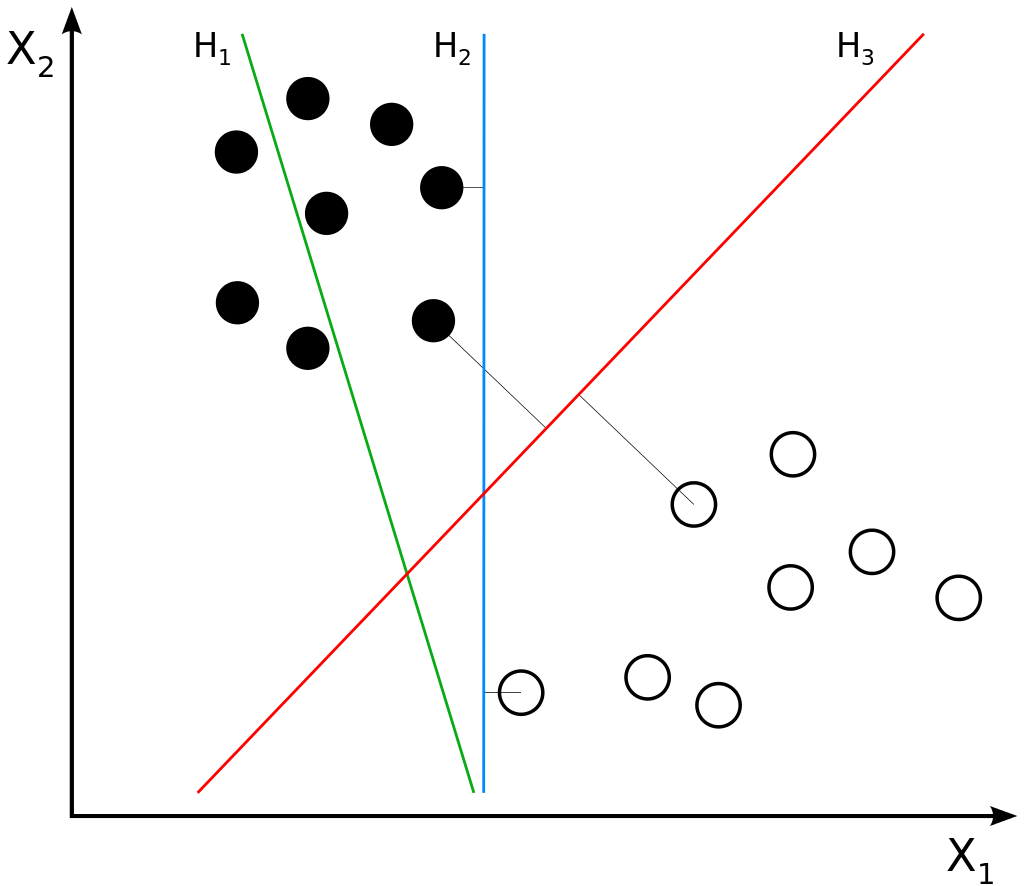

H1 does not separate the classes. H2 does, but only with a small margin. H3 separates them with the maximal margin.

The RNN can recognize "woman throwing frisbee"; using Interrogate CLIP, I got:

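Because its whole purpose is to block automated (bot) behavior, this should be hard for a simple OCR program. Perhaps even training wouldn't be enough?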

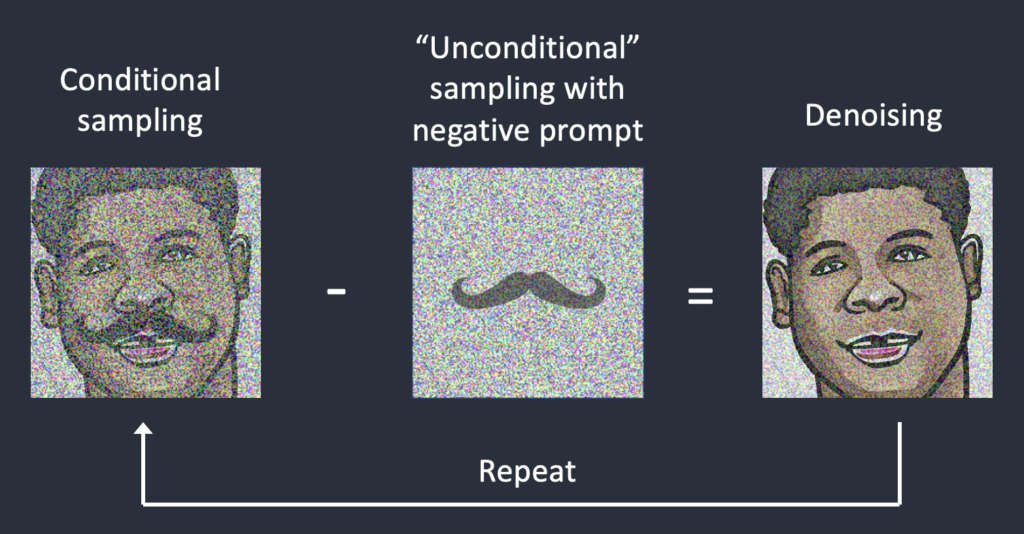

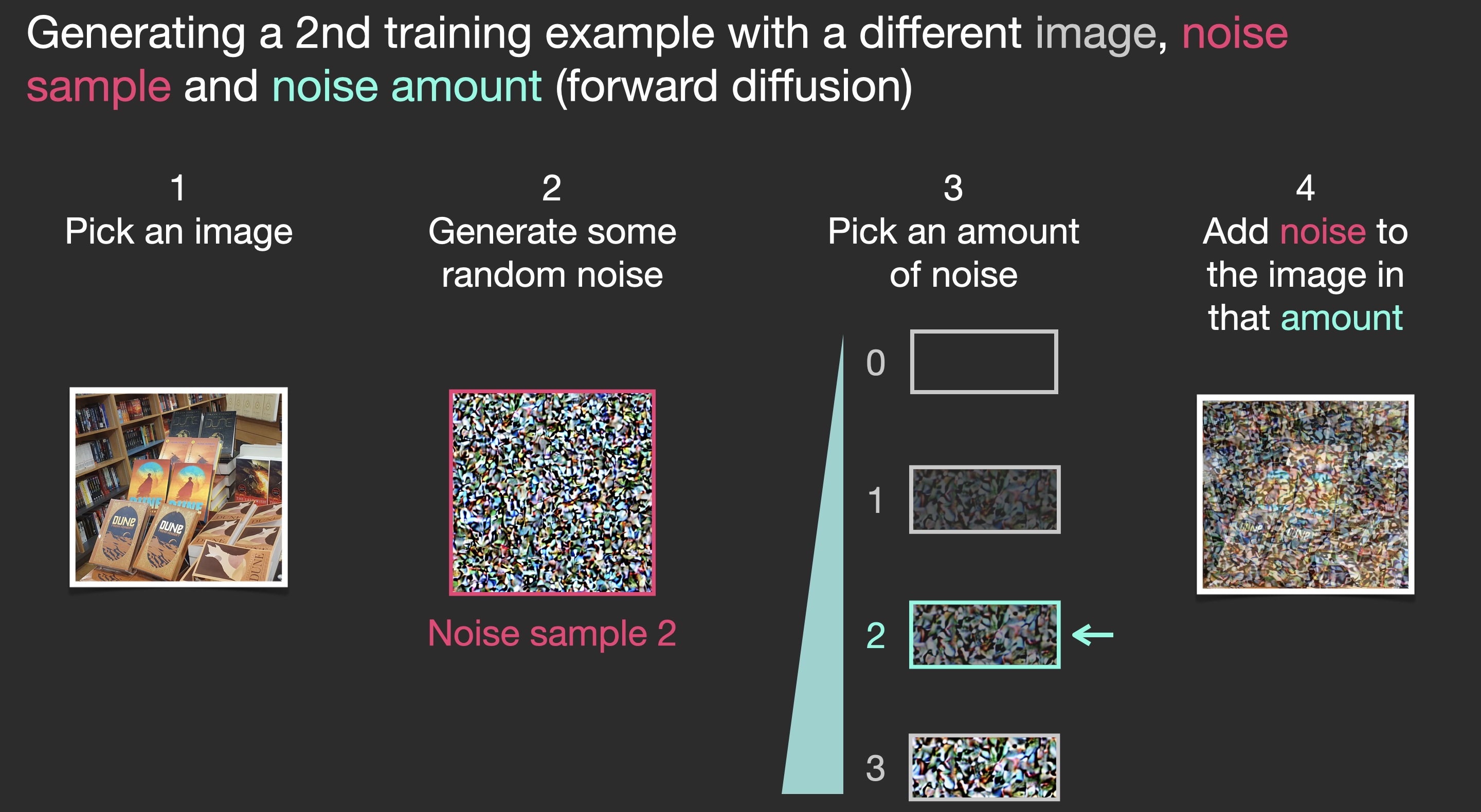

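In my view, this process of successively adding noise to the original image imitates two random processes: the attenuation of a signal during transmission, and the natural decay of storage in human memory.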

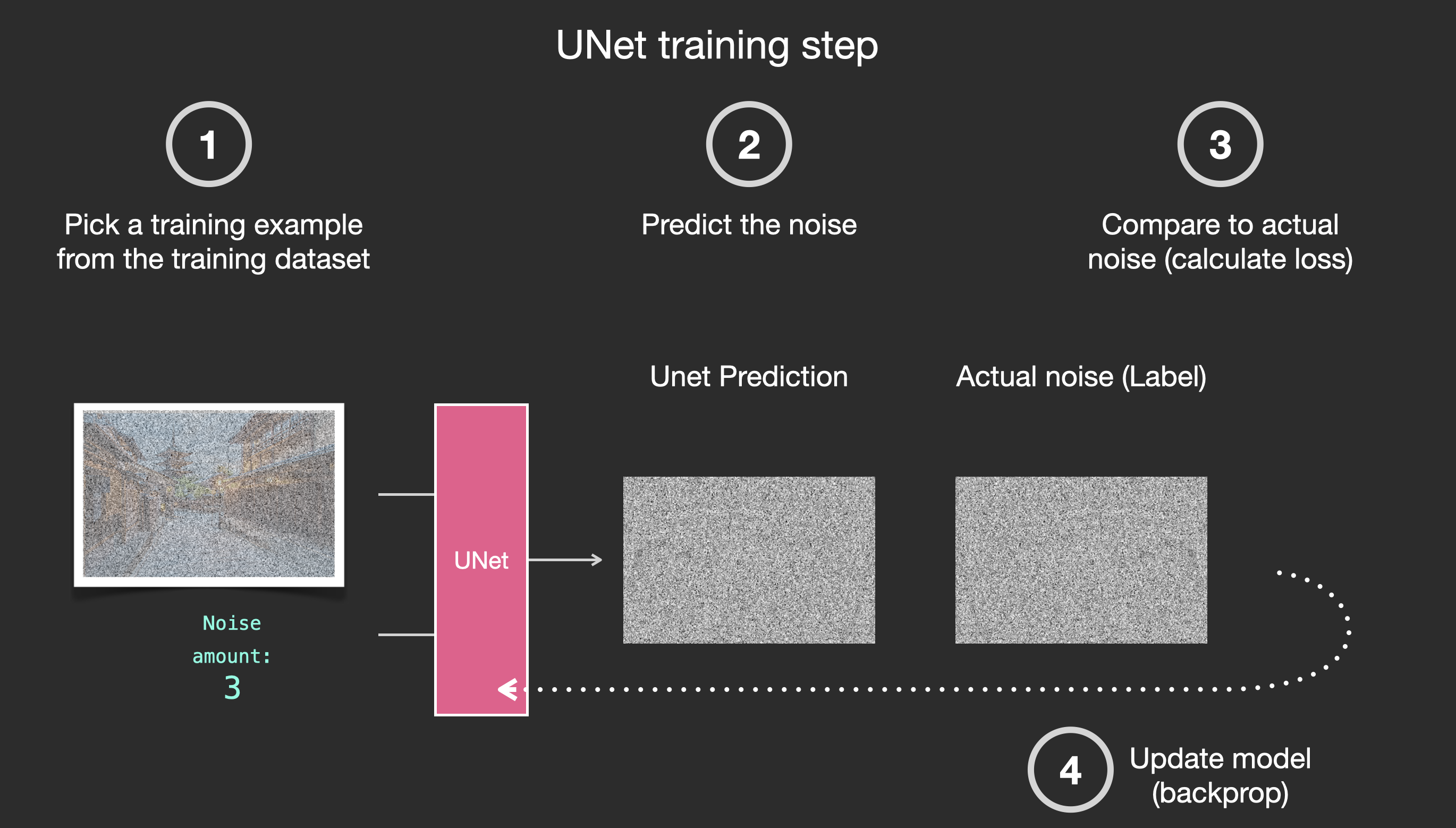

Here the amount of noise added beforehand becomes the prediction target, which amounts to training a

How does this figure relate to this repeatedly seen

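Because the other parts are principles that ordinary people can understand, whereas all the improvements and mathematical algorithms are in this core part, and this core part is exactly what I can't understand! There is too much math in the paper, and this is only one part of it; more importantly, training is an inverse process,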

I first copied out the definition. The text part is fairly clear, but this

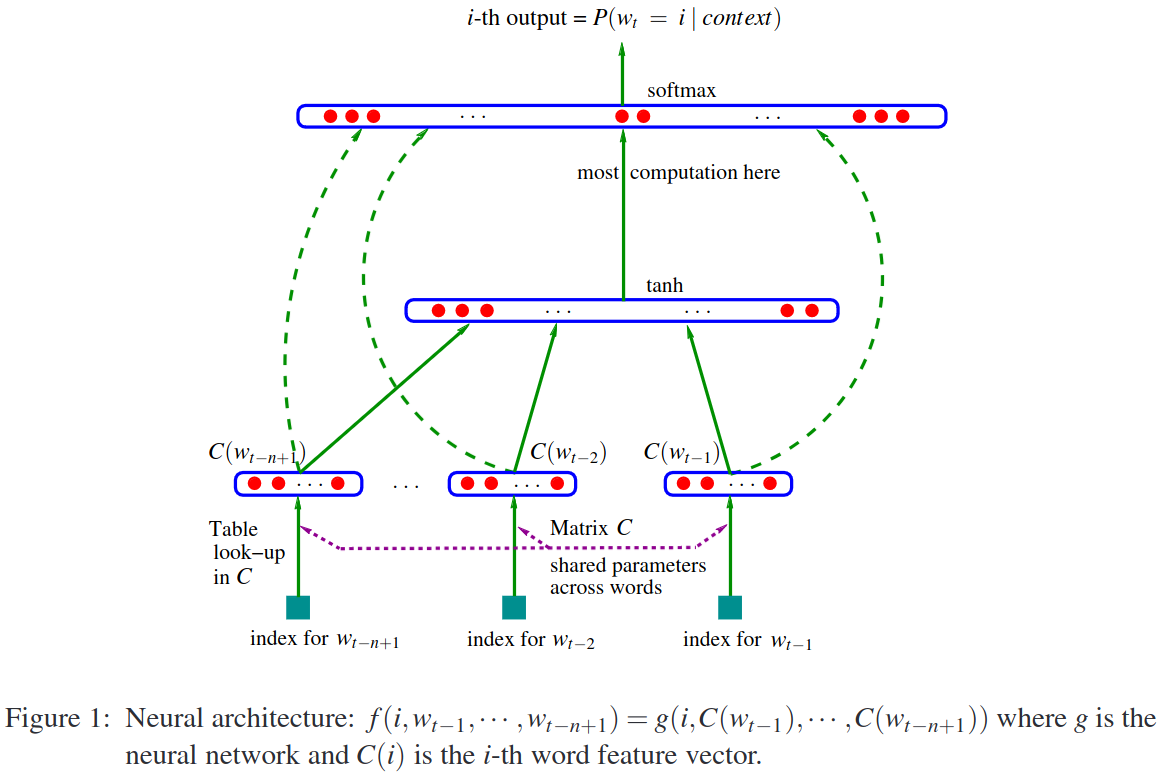

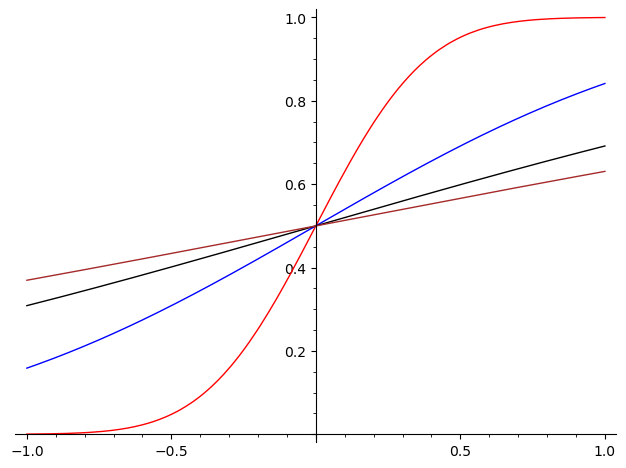

For the exercise that asks you to fit a curve through arbitrary points, I got confused right away: I thought this Maclaurin-series-style formula could solve for anything. Later I read

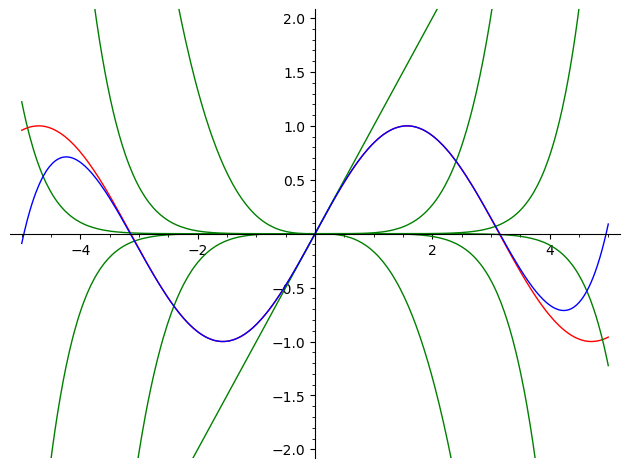

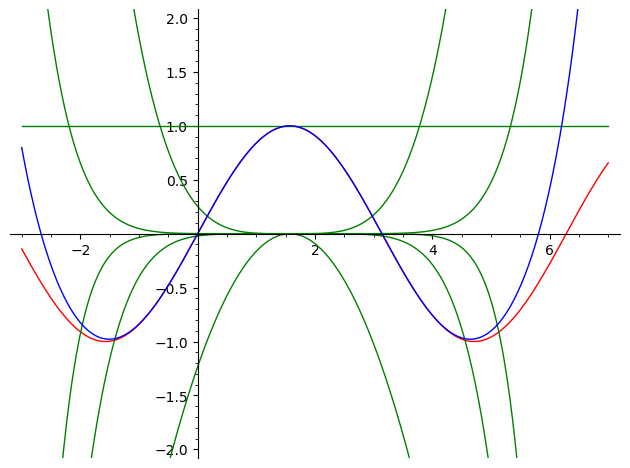

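Note how closely the red and blue curves agree around x = π/2. The Taylor series really doesn't lie! This seems to be the first time I have been truly convinced by this approximation idea in calculus. A Taylor polynomial is only an approximation, but the Taylor series is an exact mathematical equality (for a function like sin x, whose series converges to it everywhere), because the limit of infinitely many terms is true equality rather than a finite-term approximation.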
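
The comparison can be reproduced numerically. I am assuming the two curves are sin x and a Taylor polynomial centred at π/2. Because sin(π/2 + h) = cos h, that series is sin x = Σ_{k≥0} (-1)^k (x - π/2)^{2k} / (2k)!. The sketch sums the first n terms using a term-to-term ratio. `sin_taylor_half_pi` and `HALF_PI` are illustrative names.

```c
#include <assert.h>

#define HALF_PI 1.57079632679489661923

/* Sum of the first n_terms of the Taylor series of sin x about pi/2:
   sum_{k=0}^{n_terms-1} (-1)^k h^(2k) / (2k)!, with h = x - pi/2. */
double sin_taylor_half_pi(double x, int n_terms)
{
    double h = x - HALF_PI;
    double term = 1.0;  /* k = 0 term: h^0 / 0! */
    double sum = 0.0;
    assert(n_terms >= 1);
    for (int k = 0; k < n_terms; k++) {
        sum += term;
        /* next term = current * (-h^2) / ((2k+1)(2k+2)) */
        term *= -h * h / ((2.0 * k + 1.0) * (2.0 * k + 2.0));
    }
    return sum;
}
```

With a single term, the polynomial is just the constant 1, which is exact at π/2 and wrong at x = 0. With 12 terms, it already matches sin 0 = 0 to better than 1e-12. Adding terms widens the region of agreement.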

But there are some details here I still don't understand. For example, what exactly is this so-called final transformer block's activation? And that mysterious

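Perhaps there is a concept behind it in principle, but the author does not explain where the extra trained parameter Wy comes from. The author's explanation struck me as almost unbelievable: is this really how artificial intelligence is achieved? Granted, "read three hundred Tang poems thoroughly and, even if you can't compose, you can at least recite" is a universal human learning method, but this can really only imitate. For example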

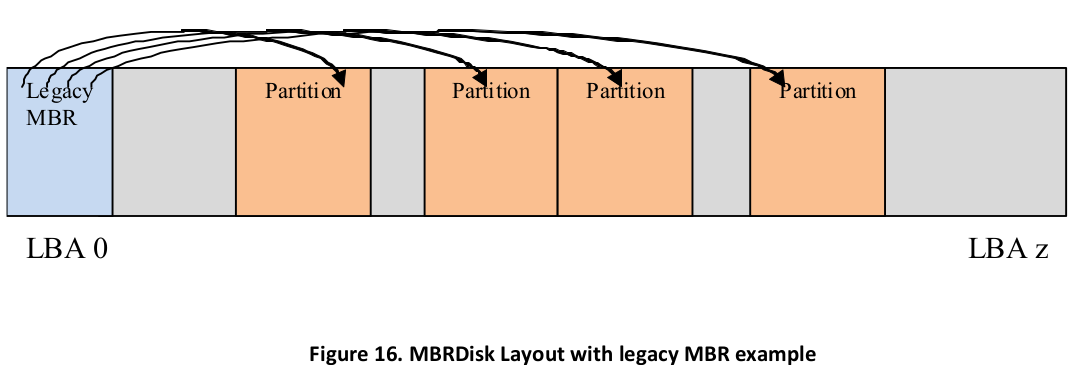

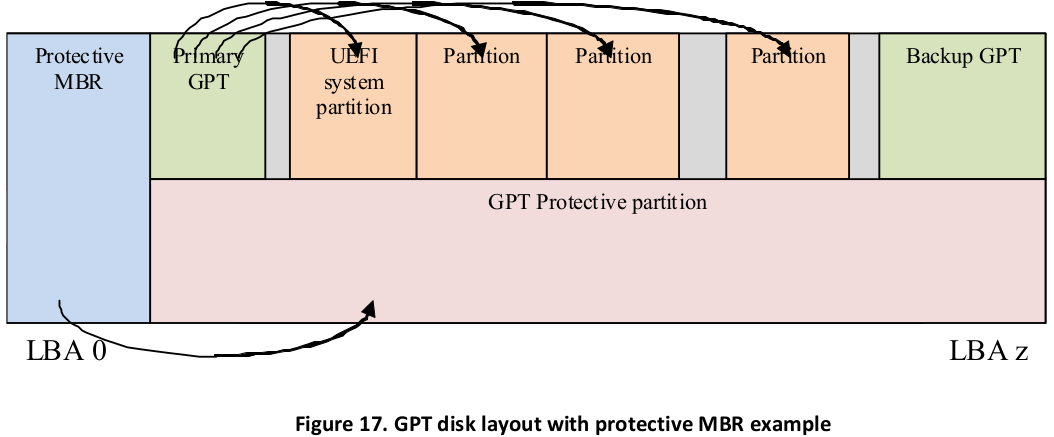

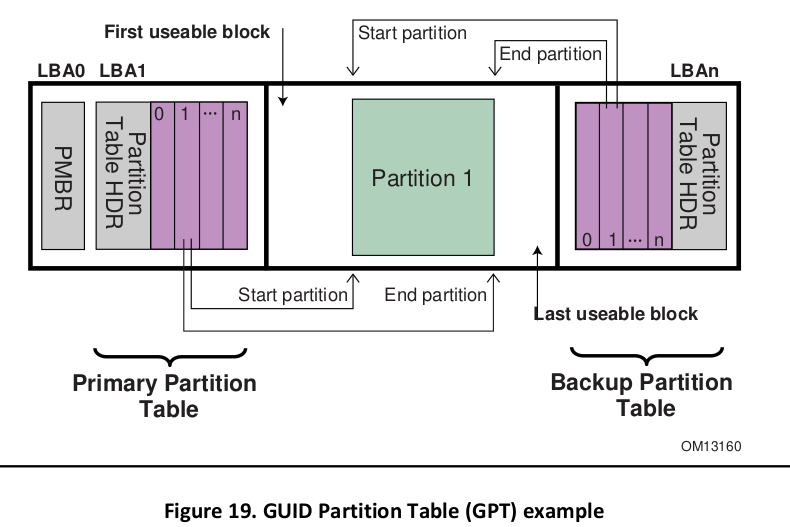

The GPT partition table is actually fairly easy to understand. The GPT is indeed a bit large: the partition entry array alone takes at least 16 KiB (16,384 bytes), i.e. 32 LBAs (assuming 512 bytes per LBA).

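So, is it clear now where the 34 comes from? One LBA for the protective MBR, one for the GPT header, and 32 for the partition entry array.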
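
The same count can be written as a small function. It assumes the usual layout: protective MBR in LBA 0, primary GPT header in LBA 1, and the entry array starting at LBA 2. It also assumes the common entry array of 128 entries × 128 bytes. The function names are illustrative.

```c
#include <assert.h>
#include <stdint.h>

/* LBAs needed for the partition entry array, rounded up to whole LBAs. */
uint64_t gpt_entry_array_lbas(uint32_t n_entries, uint32_t entry_size,
                              uint32_t lba_size)
{
    uint64_t bytes = (uint64_t)n_entries * entry_size;
    assert(lba_size > 0);
    return (bytes + lba_size - 1) / lba_size;
}

/* First usable LBA: protective MBR (LBA 0) + primary GPT header (LBA 1)
   + the entry array that follows it. */
uint64_t gpt_first_usable_lba(uint32_t n_entries, uint32_t entry_size,
                              uint32_t lba_size)
{
    return 2 + gpt_entry_array_lbas(n_entries, entry_size, lba_size);
}
```

On a disk with 4096-byte sectors, the same 16 KiB array needs only 4 LBAs, so the first usable LBA becomes 6 instead of 34.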

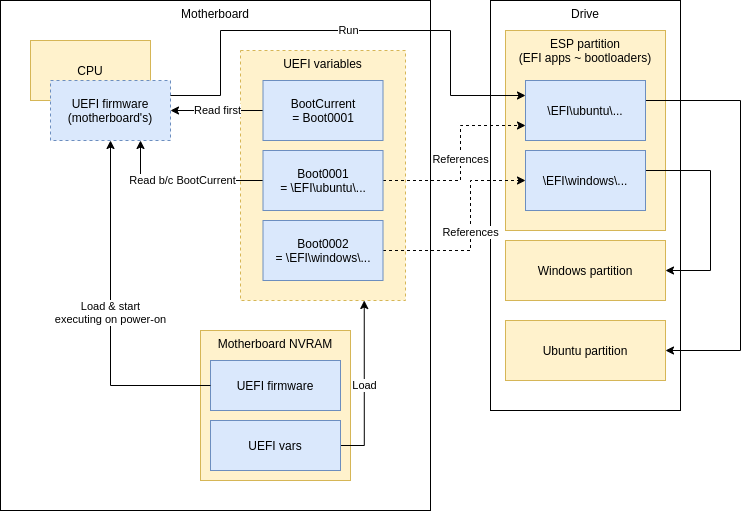

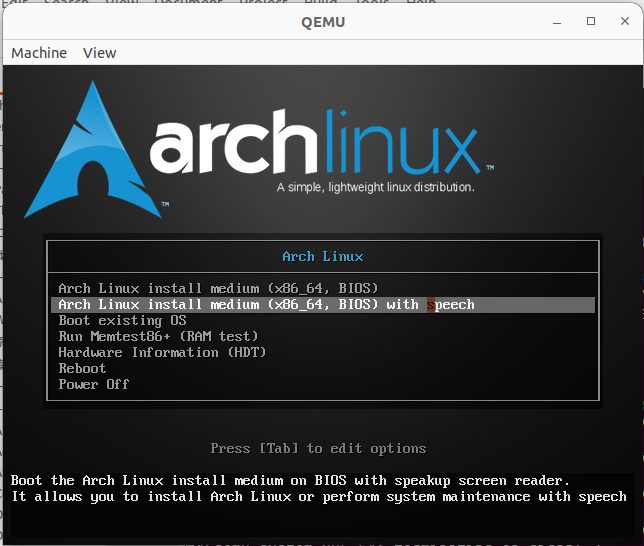

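It is far more complicated than I imagined, because how to set up the EFI-var is a big problem in itself. Naturally, I don't want to mess up my own system! Time for a break.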

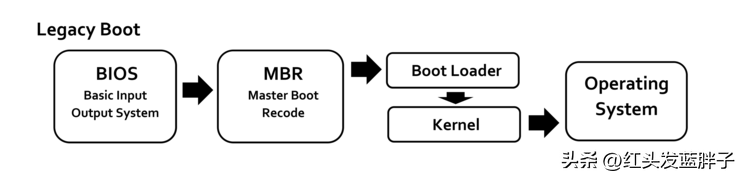

For a quick understanding, take a look at this

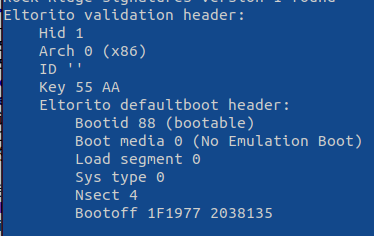

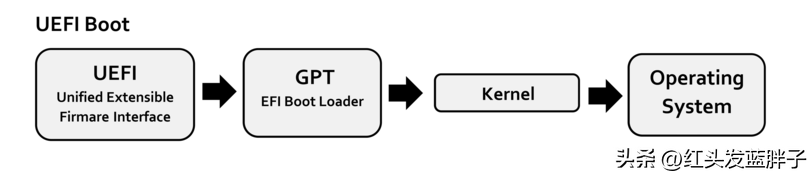

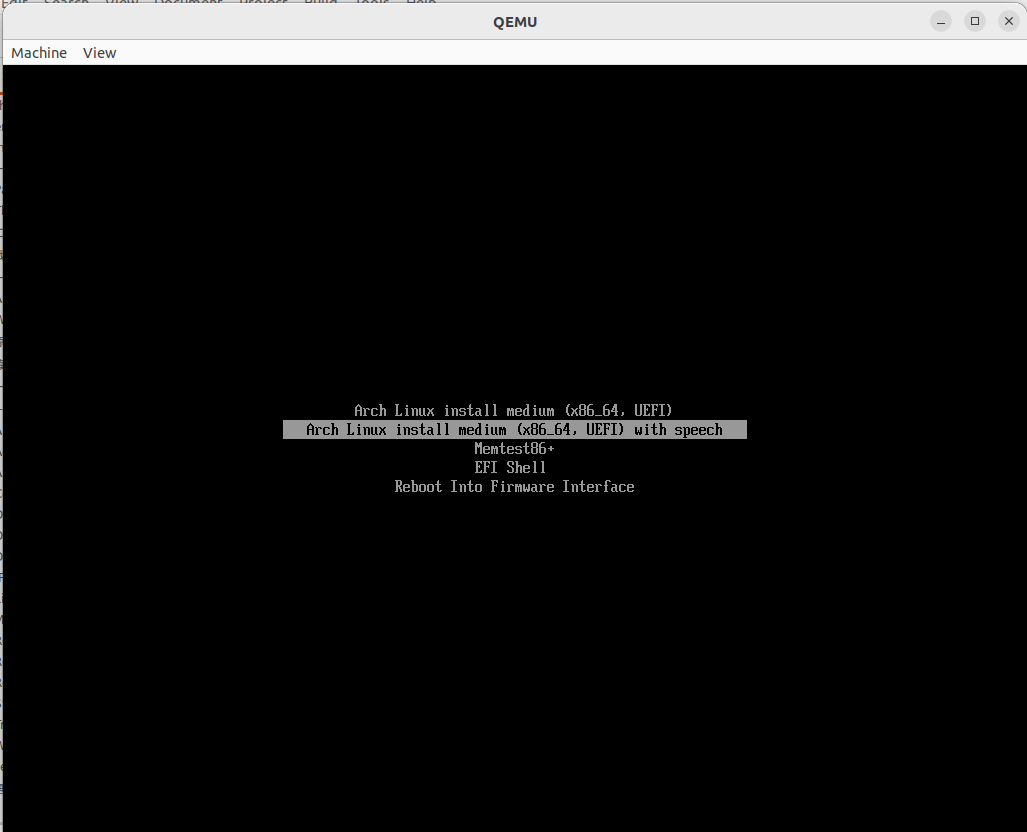

This is the uefi-boot flow.

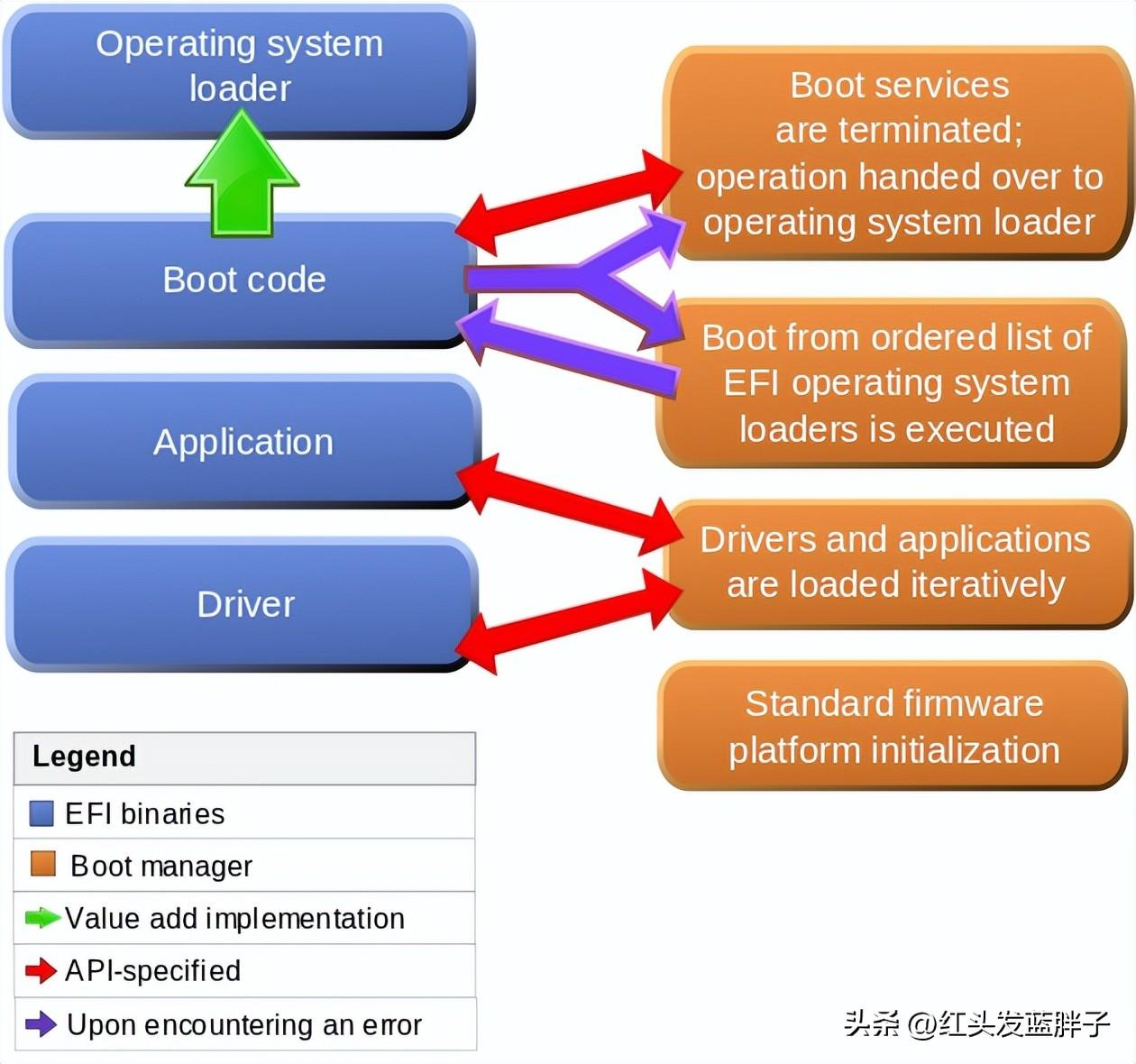

And this diagram of the detailed UEFI flow is the one I prefer.

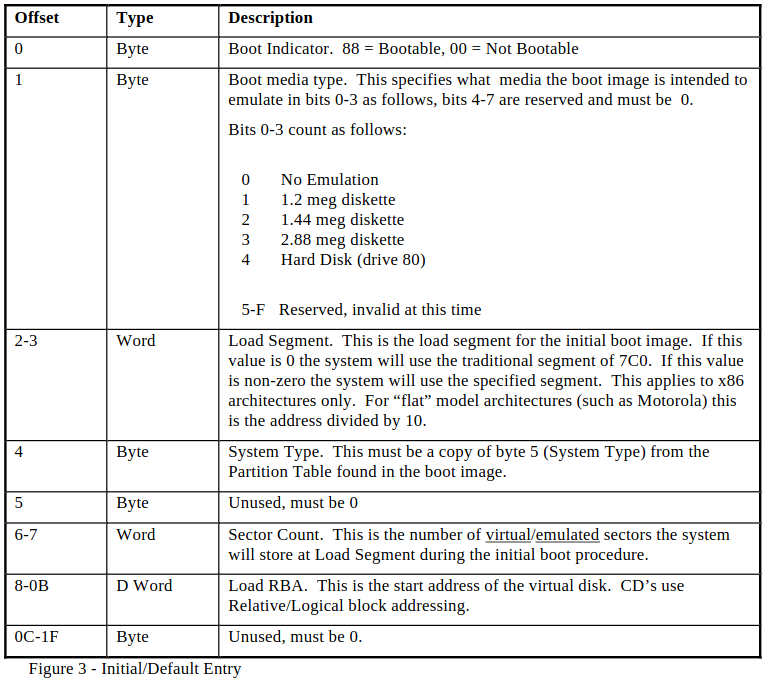

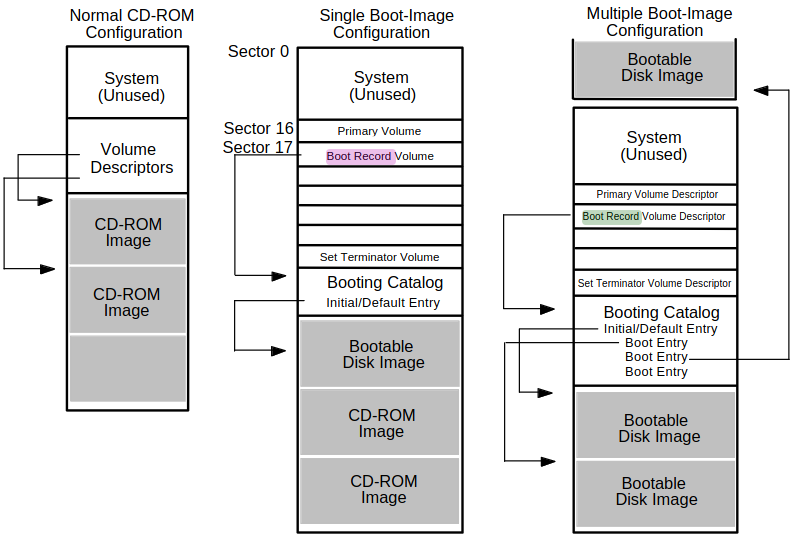

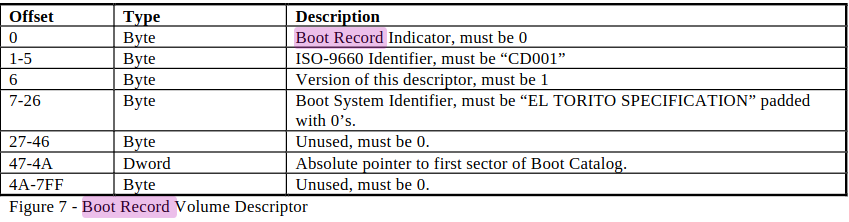

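This table must be wrong. Can't they even count the offsets? "EL TORITO SPECIFICATION", letters plus spaces, comes to 23 no matter how you count it, yet the table gives offsets 7-26. Maybe they had one too many at the El Torito inn? I suspect the offsets are in hex, because the absolute address of the boot catalog below is given in hexadecimal!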
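
Reading the offsets as hexadecimal resolves the count: 07h-26h spans 0x26 - 0x07 + 1 = 32 bytes. To my understanding of the El Torito spec, the 23-character "EL TORITO SPECIFICATION" is padded with zero bytes to fill that field. The sketch below checks a Boot Record Volume Descriptor under that reading. The layout is my assumption of what the table shows: indicator 0 at 00h, "CD001" at 01h-05h, version 1 at 06h, and a little-endian boot catalog pointer at 47h-4Ah. The names are illustrative.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Offsets read as hexadecimal (assumed layout of the Boot Record
   Volume Descriptor, one 2048-byte sector). */
enum {
    BR_INDICATOR  = 0x00,             /* 0 = boot record */
    BR_STD_ID     = 0x01,             /* "CD001", 01h-05h */
    BR_VERSION    = 0x06,             /* 1 */
    BR_SYS_ID     = 0x07,             /* boot system identifier */
    BR_SYS_ID_LEN = 0x26 - 0x07 + 1,  /* 07h-26h inclusive = 32 bytes */
    BR_CATALOG    = 0x47              /* 47h-4Ah: boot catalog sector */
};

static const char EL_TORITO_ID[] = "EL TORITO SPECIFICATION"; /* 23 chars */

/* Returns 1 and stores the boot catalog sector if sec looks like an
   El Torito boot record, otherwise 0. */
int parse_boot_record(const uint8_t *sec, uint32_t *catalog_sector)
{
    const size_t id_len = sizeof EL_TORITO_ID - 1;
    assert(sec && catalog_sector);
    if (sec[BR_INDICATOR] != 0 || memcmp(sec + BR_STD_ID, "CD001", 5) != 0 ||
        sec[BR_VERSION] != 1)
        return 0;
    if (memcmp(sec + BR_SYS_ID, EL_TORITO_ID, id_len) != 0)
        return 0;
    for (size_t i = id_len; i < (size_t)BR_SYS_ID_LEN; i++)  /* 9 padding bytes */
        if (sec[BR_SYS_ID + i] != 0)
            return 0;
    *catalog_sector = (uint32_t)sec[BR_CATALOG] |
                      (uint32_t)sec[BR_CATALOG + 1] << 8 |
                      (uint32_t)sec[BR_CATALOG + 2] << 16 |
                      (uint32_t)sec[BR_CATALOG + 3] << 24;
    return 1;
}
```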

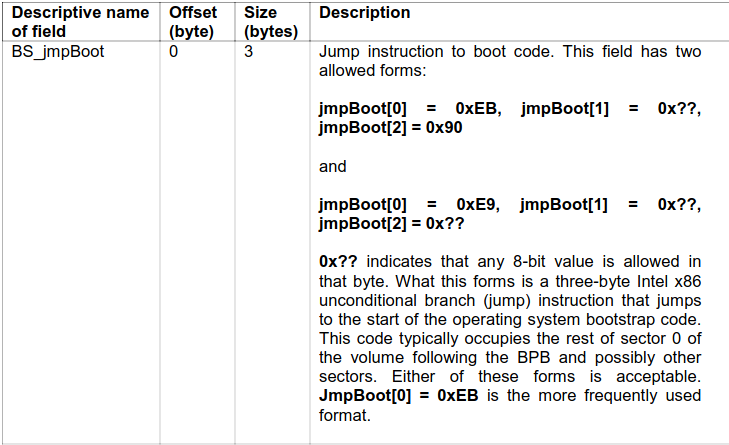

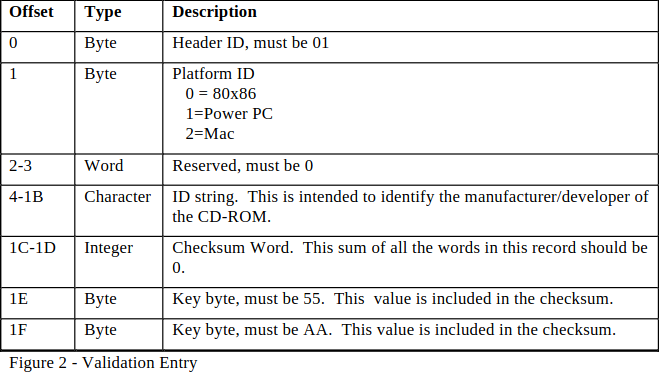

I'm also doubtful about the definition here. Who is right? Shouldn't offsets 1C-1D be 0?
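
Suppose this table is the boot catalog's Validation Entry; that is my assumption, since the figure isn't reproduced. Then 1Ch-1Dh holds a checksum word, not a reserved zero field. As I read the El Torito spec, the value is chosen so that all sixteen little-endian 16-bit words of the 32-byte entry sum to 0 (mod 2^16). The sketch below computes and checks that checksum, and the function names are illustrative.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Sum of the sixteen little-endian 16-bit words of a 32-byte entry, mod 2^16. */
uint16_t entry_word_sum(const uint8_t e[32])
{
    uint16_t sum = 0;
    for (size_t i = 0; i < 32; i += 2)
        sum = (uint16_t)(sum + (e[i] | (e[i + 1] << 8)));
    return sum;
}

/* Writes the checksum word at 1Ch-1Dh so that the entry's word sum is 0. */
void set_validation_checksum(uint8_t e[32])
{
    uint16_t ck;
    assert(e);
    e[0x1C] = 0;
    e[0x1D] = 0;
    ck = (uint16_t)(0x10000u - entry_word_sum(e));  /* negate mod 2^16 */
    e[0x1C] = (uint8_t)(ck & 0xFF);
    e[0x1D] = (uint8_t)(ck >> 8);
}
```

Consider an entry whose only non-zero bytes are the header ID 01h and the key bytes 55h AAh. Its checksum bytes come out as AAh 55h. So 1C-1D is non-zero even when the ID string is empty.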
